I am having some difficulty understanding the difference between Q-learning and SARSA. In the question "What are the differences between SARSA and Q-learning?", the following update rules are given:

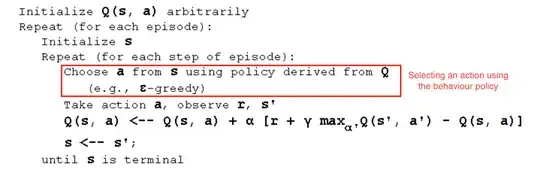

Q-Learning

$$Q(s,a) = Q(s,a) + \alpha (R_{t+1} + \gamma \max_{a'} Q(s',a') - Q(s,a))$$

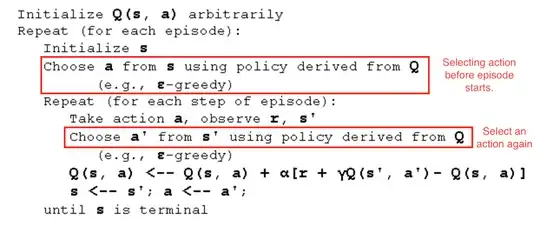

SARSA

$$Q(s,a) = Q(s,a) + \alpha (R_{t+1} + \gamma Q(s',a') - Q(s,a))$$

I know that SARSA is an on-policy method while Q-learning is off-policy. So, in Q-learning, an epsilon-greedy policy (or an epsilon-soft or softmax policy) is used to select the actions, while the greedy policy is used in the update of the Q-values (the max over actions in the target). In SARSA, the same epsilon-greedy (or epsilon-soft or softmax) policy is used both to select the actions and in the update of the Q-function.
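To make this understanding concrete, here is a minimal sketch of the two updates. It assumes a tabular `Q` stored as a dict keyed by `(state, action)`; the function names `epsilon_greedy`, `q_learning_update` and `sarsa_update` are hypothetical and chosen only for illustration.

```python
import random

def epsilon_greedy(Q, state, actions, epsilon):
    """Behaviour policy: random action with probability epsilon, else greedy."""
    if random.random() < epsilon:
        return random.choice(actions)
    return max(actions, key=lambda a: Q[(state, a)])

def q_learning_update(Q, s, a, r, s_next, actions, alpha, gamma):
    # Off-policy target: the best Q-value in s_next, regardless of
    # which action the behaviour policy will actually take there.
    target = r + gamma * max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += alpha * (target - Q[(s, a)])

def sarsa_update(Q, s, a, r, s_next, a_next, alpha, gamma):
    # On-policy target: the Q-value of a_next, the action that the
    # behaviour policy (e.g. epsilon_greedy) selected in s_next.
    target = r + gamma * Q[(s_next, a_next)]
    Q[(s, a)] += alpha * (target - Q[(s, a)])
```

The only difference between the two functions is the target: Q-learning takes the max over the actions in `s_next`, while SARSA needs the concrete action `a_next` to be passed in.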

My question is about the following:

On this website (https://www.cse.unsw.edu.au/~cs9417ml/RL1/algorithms.html), the following is written about SARSA:

> As you can see, there are two action selection steps needed, for determining the next state-action pair along with the first.

What is meant by two action selection steps? As I understand it, only one action can be selected and executed per iteration, so the other "selection" should only be for the update.
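To show where the confusion arises, below is a minimal sketch of one tabular SARSA episode as I understand it. The toy chain environment `env_step`, the helper `select_action`, and the two counters are hypothetical, made up for illustration and not taken from the linked page. In this sketch the selection call appears in two places (once before the loop and once inside it), yet only one action is executed per iteration, and the action selected inside the loop is used in the update and then executed in the next iteration.

```python
import random
from collections import defaultdict

ACTIONS = [0, 1]  # hypothetical actions: 0 = left, 1 = right

def env_step(state, action):
    """Hypothetical toy chain with states 0..3; reaching state 3 ends the episode."""
    next_state = min(state + 1, 3) if action == 1 else max(state - 1, 0)
    reward = 1.0 if next_state == 3 else 0.0
    return next_state, reward, next_state == 3

def select_action(Q, state, epsilon):
    """Epsilon-greedy selection with random tie-breaking."""
    if random.random() < epsilon:
        return random.choice(ACTIONS)
    best = max(Q[(state, a)] for a in ACTIONS)
    return random.choice([a for a in ACTIONS if Q[(state, a)] == best])

def sarsa_episode(Q, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=1000):
    selections = 0  # how often an action is selected
    executed = 0    # how often an action is executed in the environment
    s = 0
    a = select_action(Q, s, epsilon)  # first selection, before the loop
    selections += 1
    for _ in range(max_steps):
        s_next, r, done = env_step(s, a)  # one action executed per iteration
        executed += 1
        a_next = select_action(Q, s_next, epsilon)  # second selection: a'
        selections += 1
        # No bootstrapping from a terminal state.
        target = r + (0.0 if done else gamma * Q[(s_next, a_next)])
        Q[(s, a)] += alpha * (target - Q[(s, a)])
        s, a = s_next, a_next  # a' is reused next iteration, not re-selected
        if done:
            break
    return selections, executed

Q = defaultdict(float)
selections, executed = sarsa_episode(Q)
print(selections, executed)
```

In this sketch `selections` always ends up exactly one larger than `executed`, which is the extra selection made before the loop starts.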