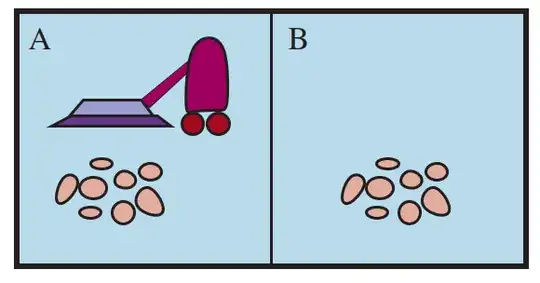

This is the vacuum-cleaner example from the book "Artificial Intelligence: A Modern Approach" (4th edition).

Consider the simple vacuum-cleaner agent that cleans a square if it is dirty and moves to the other square if not; this is the agent function tabulated as follows.

| Percept sequence | Action |
| --- | --- |
| [A, Clean] | Right |
| [A, Dirty] | Suck |
| [B, Clean] | Left |
| [B, Dirty] | Suck |
| [A, Clean], [A, Clean] | Right |
| [A, Clean], [A, Dirty] | Suck |
| ... | ... |
| [A, Clean], [A, Clean], [A, Clean] | Right |
| [A, Clean], [A, Clean], [A, Dirty] | Suck |
| ... | ... |
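To make the tabulation concrete for myself, here is a minimal sketch of how I read it as a function from a percept sequence to an action. The name `reflex_vacuum_agent` and the tuple encoding of percepts are my own assumptions, not the book's code; the sketch only reproduces the rows listed above by looking at the most recent percept.

```python
def reflex_vacuum_agent(percept_sequence):
    """Map a percept sequence to an action.

    Assumption: each percept is a (location, status) tuple such as
    ("A", "Clean"). Only the last percept is inspected, which is enough
    to reproduce every row listed in the table.
    """
    location, status = percept_sequence[-1]
    if status == "Dirty":
        return "Suck"
    # Clean square: move to the other square.
    return "Right" if location == "A" else "Left"


# Rows taken from the table:
print(reflex_vacuum_agent([("A", "Clean")]))
print(reflex_vacuum_agent([("B", "Clean")]))
print(reflex_vacuum_agent([("A", "Clean"), ("A", "Dirty")]))
```

Written this way, the table defines an action for every percept sequence, including the multi-percept rows I am asking about.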

The characteristics of the environment and the performance measure are as follows:

The performance measure awards one point for each clean square at each time step, over a "lifetime" of 1000 time steps.

The "geography" of the environment is known a priori (the above figure) but the dirt distribution and the initial location of the agent are not. Clean squares stay clean and sucking cleans the current square. The Right and Left actions move the agent one square except when this would take the agent outside the environment, in which case the agent remains where it is.

The only available actions are Right, Left, and Suck.

The agent correctly perceives its location and whether that location contains dirt.
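The environment rules above can be sketched as a small simulation. This is only my own illustration under stated assumptions: the names `reflex_agent`, `step`, and `run` are hypothetical, the two squares are called "A" (left) and "B" (right), and I assume the point for each clean square is counted after the agent's action in each time step (the description does not say exactly when it is counted).

```python
def reflex_agent(percept):
    """Simple reflex agent: suck if dirty, otherwise move to the other square."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def step(location, dirt, action):
    """Apply one action and return the new location (dirt is updated in place)."""
    if action == "Suck":
        dirt[location] = False  # sucking cleans the current square
    elif action == "Right":
        location = "B"          # from B, Right would leave the world, so stay in B
    elif action == "Left":
        location = "A"          # from A, Left would leave the world, so stay in A
    return location


def run(agent, start, dirt, steps=1000):
    """Run the agent and return the total score over the given lifetime."""
    dirt = dict(dirt)           # copy so the caller's dict is not modified
    location, score = start, 0
    for _ in range(steps):
        percept = (location, "Dirty" if dirt[location] else "Clean")
        location = step(location, dirt, agent(percept))
        # Assumption: one point per clean square, counted after the action.
        score += sum(1 for is_dirty in dirt.values() if not is_dirty)
    return score


# Agent starts in A with both squares dirty.
print(run(reflex_agent, "A", {"A": True, "B": True}))  # 1998
```

Under these assumptions the maximum possible score is 2000 (both squares clean at every step), which is what the sketch returns when both squares start clean.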

The book states that under these circumstances the agent is indeed rational. But I do not understand percept sequences that contain multiple [A, Clean] percepts, e.g. {[A, Clean], [A, Clean]}. In my opinion, after the first [A, Clean] the agent must have moved to the right square, so the sequence {[A, Clean], [A, Clean]} will never be perceived.

In other words, a second [A, Clean] percept can only occur if the agent chose Left or Suck after perceiving the first [A, Clean]. Therefore, we can conclude that the agent is not rational.

Please help me understand this.