I need to build a hand detector that recognizes the chord played by a hand on a guitar.

I read the article "Static Hand Gesture Recognition using Convolutional Neural Network with Data Augmentation", which looks like what I need (hand gesture recognition).

I think my task is a little harder than the one in the paper, because distinguishing between two chords is more difficult than distinguishing between a fist and a palm.

What I don't clearly understand is how to choose the best parameters for this more complex task: is it better to have more or fewer convolutional layers? More or fewer pooling layers? Max or average pooling?

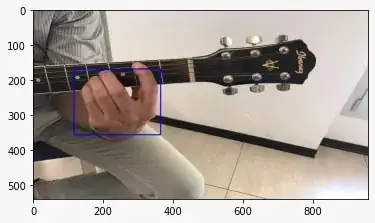
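To make the pooling part of the question concrete, here is a minimal NumPy sketch of what I understand the trade-off to be. It is not taken from the paper: `pool2d`, `size_after_pools`, the 4x4 feature map and the 224-pixel input side are all hypothetical names and values chosen for illustration.

```python
import numpy as np


def pool2d(x, size=2, mode="max"):
    """Non-overlapping size x size pooling on a 2-D array (stride == size)."""
    h, w = x.shape
    h, w = h - h % size, w - w % size  # drop rows/cols that do not fill a window
    blocks = x[:h, :w].reshape(h // size, size, w // size, size)
    if mode == "max":
        return blocks.max(axis=(1, 3))
    if mode == "avg":
        return blocks.mean(axis=(1, 3))
    raise ValueError(f"unknown mode: {mode}")


def size_after_pools(side, n_pools, size=2):
    """Spatial side length left after n_pools poolings of the given size."""
    for _ in range(n_pools):
        side //= size
    return side


# Hypothetical feature map: one thin bright vertical line (think of a finger edge).
fmap = np.zeros((4, 4))
fmap[:, 1] = 1.0

max_out = pool2d(fmap, 2, "max")  # the line keeps its full response
avg_out = pool2d(fmap, 2, "avg")  # the line is diluted by its neighbours

# Assumed 224-pixel input side: three 2x2 poolings leave a 28x28 map.
side_left = size_after_pools(224, 3)
```

In this toy case max pooling preserves the thin structure while average pooling halves its response, and every extra pooling halves the spatial resolution. Whether that matters for telling chords apart is exactly what I am unsure about.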

The input will be more or less like this one:

There will be a first net (MobileNetV2 trained on EgoHands) that finds the bounding box, crops the image, and then passes a saturated blend of the original image and its Frei-Chen edges to the second net. (Unfortunately I don't have a processed picture yet; I will post an example as soon as I get one.)
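Until I have a real processed picture, this is a rough NumPy sketch of the preprocessing I have in mind, under several assumptions: the image is grayscale, the bounding box arrives as `(x0, y0, x1, y1)` pixel coordinates, only the two gradient masks of the nine Frei-Chen masks are used, and "saturated blending" means adding the edge map to the crop and clipping at 255. The function names are hypothetical.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)
# The two Frei-Chen gradient masks; the full operator has nine masks (simplification).
FC_ROW = np.array([[1, SQRT2, 1], [0, 0, 0], [-1, -SQRT2, -1]]) / (2 * SQRT2)
FC_COL = FC_ROW.T


def filter3x3(img, kernel):
    """Correlate a 2-D float image with a 3x3 kernel, replicating the border."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def frei_chen_edges(gray):
    """Gradient magnitude from the two gradient masks, scaled to 0..255."""
    gray = gray.astype(np.float64)
    mag = np.hypot(filter3x3(gray, FC_ROW), filter3x3(gray, FC_COL))
    peak = mag.max()
    return mag * (255.0 / peak) if peak > 0 else mag


def crop_and_blend(gray, box):
    """Crop to box = (x0, y0, x1, y1), then add the edge map, saturating at 255."""
    x0, y0, x1, y1 = box
    crop = gray[y0:y1, x0:x1]
    blended = crop.astype(np.float64) + frei_chen_edges(crop)
    return np.clip(blended, 0, 255).astype(np.uint8)


# Synthetic stand-in for a frame: dark left half, bright right half.
frame = np.full((8, 8), 50, dtype=np.uint8)
frame[:, 4:] = 200
net2_input = crop_and_blend(frame, (1, 1, 7, 7))  # hypothetical box from the first net
```

Flat regions pass through unchanged and the pixels along the intensity step saturate to 255, which is the effect I expect the second net to see around finger and string edges.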