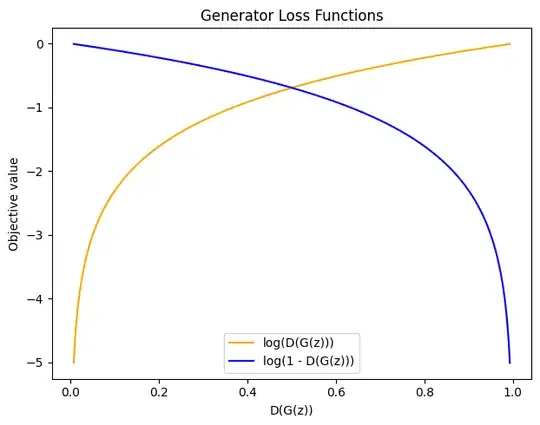

The research paper Generative Adversarial Nets says that the generator should maximize $\log D(G(z))$ instead of minimizing $\log(1 - D(G(z)))$, since the former provides a stronger gradient than the latter.

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))]$$

In practice, the above equation may not provide sufficient gradient for $G$ to learn well. Early in learning, when $G$ is poor, $D$ can reject samples with high confidence because they are clearly different from the training data. In this case, $\log(1 - D(G(z)))$ saturates. Rather than training $G$ to minimize $\log(1 - D(G(z)))$ we can train $G$ to maximize $\log D(G(z))$. This objective function results in the same fixed point of the dynamics of $G$ and $D$ but provides much stronger gradients early in learning.
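The saturation described in the passage can be checked with a few numbers. The sketch below is only an illustration under an assumption that is not in the excerpt: the discriminator output is taken to be a sigmoid of a scalar logit `a`, so $D(G(z)) = \sigma(a)$. Under that assumption, the derivative of $\log(1 - \sigma(a))$ with respect to `a` is $-\sigma(a)$, and the derivative of $\log \sigma(a)$ is $1 - \sigma(a)$. The function names are hypothetical.

```python
import math

def sigmoid(a):
    """Logistic function; stands in for the discriminator output D(G(z))."""
    return 1.0 / (1.0 + math.exp(-a))

def grad_saturating(a):
    """d/da of log(1 - sigmoid(a)), the term the generator would minimize."""
    return -sigmoid(a)

def grad_non_saturating(a):
    """d/da of log(sigmoid(a)), the term the generator would maximize."""
    return 1.0 - sigmoid(a)

# Very negative logits mean D confidently rejects the generated sample.
for a in (-6.0, -2.0, 0.0, 2.0):
    d = sigmoid(a)
    print(
        f"a={a:+.1f}  D(G(z))={d:.4f}  "
        f"|grad log(1-D)|={abs(grad_saturating(a)):.4f}  "
        f"|grad log D|={abs(grad_non_saturating(a)):.4f}"
    )
```

At `a = -6`, where $D(G(z)) \approx 0.0025$, the gradient of $\log(1 - D(G(z)))$ with respect to the logit has magnitude about 0.0025, while the gradient of $\log D(G(z))$ has magnitude about 0.9975. This is the regime the quoted passage calls "early in learning".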

A gradient is a vector containing the partial derivatives of an output w.r.t. its inputs. At a particular point, the gradient is a vector of real numbers. During training, the gradient points in the direction of steepest increase, and the update takes a step in the opposite direction whose size depends on the gradient's magnitude. This is my understanding of gradients.
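As a small concrete instance of this understanding, the sketch below evaluates the gradient of a hypothetical function $f(x, y) = x^2 + 3y$ at one point, computes its Euclidean norm, and takes one gradient-descent step. The function, the point, and the learning rate are arbitrary choices made for illustration.

```python
import math

def grad_f(x, y):
    """Gradient of the hypothetical f(x, y) = x**2 + 3*y: (df/dx, df/dy)."""
    return (2.0 * x, 3.0)

point = (1.0, 2.0)
g = grad_f(*point)       # the gradient at this point is a vector of real numbers
norm = math.hypot(*g)    # Euclidean norm, one way to measure its magnitude

lr = 0.1                 # assumed learning rate
# One descent step: move against the gradient, scaled by the learning rate.
new_point = tuple(p - lr * gi for p, gi in zip(point, g))

print("gradient:", g)
print("norm:", norm)
print("after one step:", new_point)
```

Here the gradient at $(1, 2)$ is $(2, 3)$ with norm $\sqrt{13}$. A gradient with a much smaller norm at the same learning rate would move the point correspondingly less.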

What is meant by sufficient or strong gradient? Is it the norm of the gradient or some other measure on the gradient vector?

If possible, please show an example of strong and weak gradients with numbers so that I can quickly understand.