Here is how I constructed the reward function in one of my projects, where I trained an RL agent for a self-driving robot that navigates a tunnel using only a single camera:

$$
R = \left\{
\begin{array}{ll}
d_m - 3 - \left| d_l - d_r \right| & \text{if not terminal state} \\
-100 & \text{otherwise}
\end{array}
\right.
$$

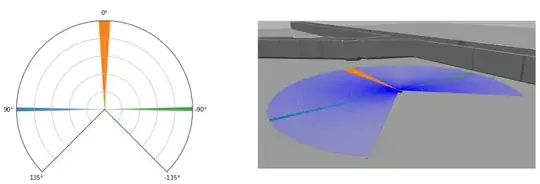

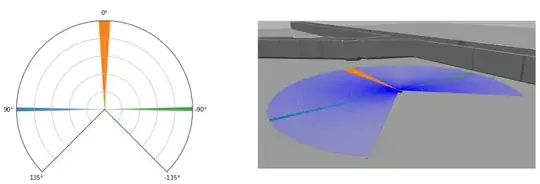

where $d_m$ is the middle distance, $d_l$ is the left distance and $d_r$ is the right distance, with $d_m, d_l, d_r \in [0, 10]$. These distances are measured by a laser sensor, but the agent does not observe them directly. Instead, it receives a real number as the reward signal, which indicates how well it performed an action, and it tries to map the camera view to that signal. The function is designed so that the agent stays in the middle of the tunnel (the $-\left|d_l - d_r\right|$ term) and avoids head-on collisions (the $d_m - 3$ term, which turns negative once $d_m$ drops below 3). Thus, the highest possible reward is $R = 10 - 3 - \left| 10 - 10 \right| = 7$.
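To make the formula concrete, here is a minimal Python sketch of it. The function name `reward` and the `terminal` flag are my own naming for illustration, not taken from the project code; the distances are assumed to arrive already clipped to $[0, 10]$.

```python
def reward(d_m: float, d_l: float, d_r: float, terminal: bool) -> float:
    """Reward from the three laser distances (each assumed to lie in [0, 10])."""
    if terminal:
        # Terminal state: fixed large penalty.
        return -100.0
    # d_m - 3 discourages getting close to an obstacle ahead;
    # -|d_l - d_r| discourages drifting away from the tunnel's center line.
    return d_m - 3.0 - abs(d_l - d_r)


print(reward(10, 10, 10, terminal=False))  # best case: 7.0
print(reward(0, 0, 10, terminal=False))    # worst non-terminal case: -13.0
```

Under these assumptions the non-terminal reward lies in $[-13, 7]$, so the terminal penalty of $-100$ is always far worse than any single step.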

Basically, you can use any number of parameters in your reward function as long as it accurately reflects the goal the agent needs to achieve. For instance, I could penalize the agent for frequent steering on straight sections so that it drives smoothly.
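As a rough sketch of what such an extra term could look like (I did not implement this in the project), one option is to subtract a penalty proportional to the change in steering command between consecutive steps. The names `steer`, `prev_steer` and `steer_weight`, as well as the default weight of 0.5, are assumptions chosen for illustration. Note that this simple version penalizes steering changes everywhere, not only on straight sections.

```python
def shaped_reward(
    d_m: float,
    d_l: float,
    d_r: float,
    terminal: bool,
    steer: float,
    prev_steer: float,
    steer_weight: float = 0.5,  # hypothetical weight, would need tuning
) -> float:
    """Distance-based reward minus a penalty for abrupt steering changes."""
    if terminal:
        return -100.0
    base = d_m - 3.0 - abs(d_l - d_r)
    # Penalize the magnitude of the steering change since the previous step.
    smoothness_penalty = steer_weight * abs(steer - prev_steer)
    return base - smoothness_penalty


# Same distances, but the second call flips the steering from -0.5 to 0.5.
print(shaped_reward(10, 10, 10, False, steer=0.0, prev_steer=0.0))   # 7.0
print(shaped_reward(10, 10, 10, False, steer=0.5, prev_steer=-0.5))  # 6.5
```

The weight controls the trade-off: if it is too large, the agent may prefer not to steer at all, even when it has drifted off center.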