I am working on a project to detect and localize forgeries in images. I am using the CASIA v2 dataset with a U-Net model, and I have binary masks for all the images in the dataset. The metric I am using is the F1 score.

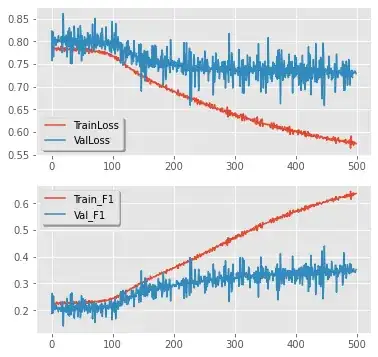

The issue is that the model overfits heavily: the validation loss plateaus at a high value.

The batch size is 128, the learning rate is 0.000001, and the image size is 128 x 128.

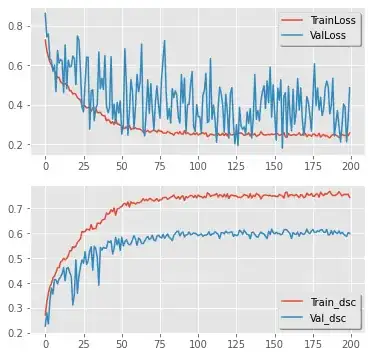

Updated graph for batch size 16 with the changes mentioned by @spb is as follows:

I have also tried a learning rate scheduler that starts with a high learning rate and decreases it on plateaus, but that didn't help much.

I am also using the Albumentations package to augment both the images and their masks. I load the images and masks, apply the augmentations, store the augmented images and masks in separate arrays, and finally extend the original arrays with the augmented ones. So the model is trained on the original images and masks plus the augmented ones. The augmentations I am using are:
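To make the scheduling behaviour concrete, this is roughly what I mean by decreasing on plateaus. It is a plain-Python sketch of the logic only (Keras offers it as the `ReduceLROnPlateau` callback); the function name and the values of `lr`, `factor`, `patience` and `min_lr` are illustrative assumptions, not my actual settings.

```python
def reduce_on_plateau(val_losses, lr=0.1, factor=0.5, patience=2, min_lr=1e-6):
    """Return the learning rate in effect at each epoch for a validation-loss history."""
    best = float("inf")   # best validation loss seen so far
    wait = 0              # epochs since the last improvement
    schedule = []
    for loss in val_losses:
        schedule.append(lr)          # rate used during this epoch
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:     # plateau detected: shrink the rate
                lr = max(lr * factor, min_lr)
                wait = 0
    return schedule


# Hypothetical validation losses: two epochs without improvement trigger one reduction.
history = [1.0, 0.8, 0.8, 0.8, 0.7]
schedule = reduce_on_plateau(history)
print(schedule)
```

The rate only drops after `patience` consecutive epochs without a new best validation loss, and never goes below `min_lr`.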

    import albumentations as A

    Augment = A.Compose([
        A.VerticalFlip(p=0.5),
        A.RandomRotate90(p=0.5),
        A.HorizontalFlip(p=0.5),
    ])
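For clarity, the "augment, then extend" step works roughly like the sketch below. To keep it self-contained, NumPy flips and rotations stand in for the Albumentations pipeline, and the helper names `augment_pair` and `extend_with_augmented` as well as the random toy data are assumptions made for illustration. The key point is that the image and its mask always receive the same spatial transform.

```python
import numpy as np


def augment_pair(image, mask, rng):
    """Apply the same random flips and 90-degree rotation to an image and its mask."""
    if rng.random() < 0.5:                       # vertical flip
        image, mask = image[::-1], mask[::-1]
    if rng.random() < 0.5:                       # rotate by a random multiple of 90 degrees
        k = int(rng.integers(0, 4))
        image, mask = np.rot90(image, k), np.rot90(mask, k)
    if rng.random() < 0.5:                       # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)


def extend_with_augmented(images, masks, seed=0):
    """Return the original arrays followed by one augmented copy of every pair."""
    rng = np.random.default_rng(seed)
    pairs = [augment_pair(img, msk, rng) for img, msk in zip(images, masks)]
    aug_images = np.stack([p[0] for p in pairs])
    aug_masks = np.stack([p[1] for p in pairs])
    return np.concatenate([images, aug_images]), np.concatenate([masks, aug_masks])


# Toy data: 4 square RGB images whose masks are derived from channel 0.
images = np.random.default_rng(1).random((4, 128, 128, 3))
masks = (images[..., :1] > 0.5).astype(np.float32)
all_images, all_masks = extend_with_augmented(images, masks)
print(all_images.shape, all_masks.shape)
```

Because the toy masks are computed pixel-wise from the images, they stay consistent with the augmented images only if both were transformed identically, which makes the pairing easy to check.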

I have split the dataset into 70% training, 20% validation, and 10% testing. Here is a snippet of my model (updated code below):

    from tensorflow.keras.layers import (Activation, BatchNormalization, Conv2D,
                                         Conv2DTranspose, Dropout, MaxPooling2D,
                                         concatenate)
    from tensorflow.keras.models import Model

    def conv2d_block(input_tensor, n_filters, kernel_size=3, batchnorm=True):
        """Function to add 2 convolutional layers with the parameters passed to it"""
        # first layer
        x = Conv2D(filters=n_filters, kernel_size=(kernel_size, kernel_size),
                   kernel_initializer='he_normal', padding='same')(input_tensor)
        if batchnorm:
            x = BatchNormalization()(x)
        x = Activation('relu')(x)

        # second layer (takes x, the output of the first layer, as its input)
        x = Conv2D(filters=n_filters, kernel_size=(kernel_size, kernel_size),
                   kernel_initializer='he_normal', padding='same')(x)
        if batchnorm:
            x = BatchNormalization()(x)
        x = Activation('relu')(x)

        return x

    def get_unet(input_img, n_filters=16, dropout=0.1, batchnorm=True):
        """Function to define the UNET Model"""
        # Contracting Path
        c1 = conv2d_block(input_img, n_filters * 1, kernel_size=3, batchnorm=batchnorm)
        p1 = MaxPooling2D((2, 2))(c1)
        #p1 = Dropout(dropout)(p1)

        c2 = conv2d_block(p1, n_filters * 2, kernel_size=3, batchnorm=batchnorm)
        p2 = MaxPooling2D((2, 2))(c2)
        #p2 = Dropout(dropout)(p2)

        c3 = conv2d_block(p2, n_filters * 4, kernel_size=3, batchnorm=batchnorm)
        p3 = MaxPooling2D((2, 2))(c3)
        #p3 = Dropout(dropout)(p3)

        c4 = conv2d_block(p3, n_filters * 8, kernel_size=3, batchnorm=batchnorm)
        p4 = MaxPooling2D((2, 2))(c4)
        #p4 = Dropout(dropout)(p4)

        c5 = conv2d_block(p4, n_filters * 16, kernel_size=3, batchnorm=batchnorm)
        p5 = MaxPooling2D((2, 2))(c5)
        #p5 = Dropout(dropout)(p5)

        c6 = conv2d_block(p5, n_filters=n_filters * 32, kernel_size=3, batchnorm=batchnorm)

        # Expansive Path
        u7 = Conv2DTranspose(n_filters * 16, (3, 3), strides=(2, 2), padding='same')(c6)
        u7 = concatenate([u7, c5])
        u7 = Dropout(dropout)(u7)
        c7 = conv2d_block(u7, n_filters * 16, kernel_size=3, batchnorm=batchnorm)

        u8 = Conv2DTranspose(n_filters * 8, (3, 3), strides=(2, 2), padding='same')(c7)
        u8 = concatenate([u8, c4])
        u8 = Dropout(dropout)(u8)
        c8 = conv2d_block(u8, n_filters * 8, kernel_size=3, batchnorm=batchnorm)

        u9 = Conv2DTranspose(n_filters * 4, (3, 3), strides=(2, 2), padding='same')(c8)
        u9 = concatenate([u9, c3])
        u9 = Dropout(dropout)(u9)
        c9 = conv2d_block(u9, n_filters * 4, kernel_size=3, batchnorm=batchnorm)

        u10 = Conv2DTranspose(n_filters * 2, (3, 3), strides=(2, 2), padding='same')(c9)
        u10 = concatenate([u10, c2])
        u10 = Dropout(dropout)(u10)
        c10 = conv2d_block(u10, n_filters * 2, kernel_size=3, batchnorm=batchnorm)

        u11 = Conv2DTranspose(n_filters * 1, (3, 3), strides=(2, 2), padding='same')(c10)
        u11 = concatenate([u11, c1])
        u11 = Dropout(dropout)(u11)
        c11 = conv2d_block(u11, n_filters * 1, kernel_size=3, batchnorm=batchnorm)

        outputs = Conv2D(1, (1, 1), activation='sigmoid')(c11)
        model = Model(inputs=[input_img], outputs=[outputs])
        return model
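As a quick check on the architecture above, the feature-map sizes can be traced by hand. The sketch below does that arithmetic for my 128 x 128 inputs and the default `n_filters = 16`; the helper name `unet_feature_sizes` is hypothetical and it only mirrors the contracting path (five 2x2 poolings, filters doubling at each level).

```python
def unet_feature_sizes(input_size=128, n_filters=16, depth=5):
    """List (spatial size, channel count) for each encoder level, bottleneck last."""
    sizes = []
    size = input_size
    for level in range(depth + 1):
        sizes.append((size, n_filters * 2 ** level))  # filters double per level
        size //= 2                                    # each 2x2 max-pool halves the size
    return sizes


levels = unet_feature_sizes()
for size, channels in levels:
    print(f"{size:>3} x {size:<3} -> {channels} channels")
```

So with these settings the bottleneck `c6` works on very small feature maps with many channels, which may be relevant when judging how much capacity the model has relative to the dataset.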

Currently I am not using dropout, as it leads to a higher validation loss plateau in my case.

The F1 score and F1 loss I am calculating are as follows:

    import tensorflow as tf
    from tensorflow.keras import backend as K

    def f1(y_true, y_pred):
        y_pred = K.round(y_pred)  # threshold the predictions at 0.5
        tp = K.sum(K.cast(y_true * y_pred, 'float'), axis=0)
        tn = K.sum(K.cast((1 - y_true) * (1 - y_pred), 'float'), axis=0)
        fp = K.sum(K.cast((1 - y_true) * y_pred, 'float'), axis=0)
        fn = K.sum(K.cast(y_true * (1 - y_pred), 'float'), axis=0)

        p = tp / (tp + fp + K.epsilon())
        r = tp / (tp + fn + K.epsilon())

        f1 = 2 * p * r / (p + r + K.epsilon())
        # tf.math.is_nan is the TensorFlow 2 name of tf.is_nan
        f1 = tf.where(tf.math.is_nan(f1), tf.zeros_like(f1), f1)
        return K.mean(f1)

    def f1_loss(y_true, y_pred):
        # same as f1 above, but on the raw (unrounded) predictions so it stays differentiable
        tp = K.sum(K.cast(y_true * y_pred, 'float'), axis=0)
        tn = K.sum(K.cast((1 - y_true) * (1 - y_pred), 'float'), axis=0)
        fp = K.sum(K.cast((1 - y_true) * y_pred, 'float'), axis=0)
        fn = K.sum(K.cast(y_true * (1 - y_pred), 'float'), axis=0)

        p = tp / (tp + fp + K.epsilon())
        r = tp / (tp + fn + K.epsilon())

        f1 = 2 * p * r / (p + r + K.epsilon())
        # tf.math.is_nan is the TensorFlow 2 name of tf.is_nan
        f1 = tf.where(tf.math.is_nan(f1), tf.zeros_like(f1), f1)
        return 1 - K.mean(f1)
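To sanity-check the arithmetic in these two functions, here is a NumPy sketch of the same formula on a toy 2 x 2 mask. Note the assumptions: the helper `f1_numpy` is hypothetical, `eps` is meant to mirror the default `K.epsilon()`, and it sums over all pixels instead of over `axis=0` as my Keras functions do, so it is not a drop-in equivalent.

```python
import numpy as np


def f1_numpy(y_true, y_pred, eps=1e-7):
    """F1 from (soft or hard) predictions, reduced over every element."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    tp = np.sum(y_true * y_pred)          # true positives
    fp = np.sum((1 - y_true) * y_pred)    # false positives
    fn = np.sum(y_true * (1 - y_pred))    # false negatives
    p = tp / (tp + fp + eps)              # precision
    r = tp / (tp + fn + eps)              # recall
    return 2 * p * r / (p + r + eps)


y_true = np.array([[1, 1], [0, 0]])
y_pred = np.array([[0.9, 0.2], [0.6, 0.1]])

hard_f1 = f1_numpy(y_true, np.round(y_pred))   # metric: thresholded predictions
soft_loss = 1 - f1_numpy(y_true, y_pred)       # loss: raw probabilities
print(hard_f1, soft_loss)
```

With one true positive, one false positive and one false negative after rounding, the hard F1 comes out at about 0.5, while the soft loss is about 0.42 because the raw probabilities give partial credit.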

I have also tried other losses such as focal_tversky, but the results were similar.

What can be the issue and how can I solve it?

Is it:

- An issue with my data, such as the presence of outliers

- A model-related issue

- A batch size or learning rate issue

- Or something else?

Any help would be greatly appreciated, as I need to solve this soon.