Having worked with neural networks for about half a year, I have experienced first-hand what are often claimed to be their main disadvantages: overfitting and getting stuck in local minima. However, through hyperparameter optimization and some newly invented approaches, I have overcome these in my scenarios. From my own experiments:

- Dropout seems to be a very good regularization method.
- Batch normalization eases training and keeps signal strength consistent across many layers.
- Adadelta consistently reaches very good optima.
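To make the three techniques concrete, here is a minimal pure-Python sketch of each. It is illustrative only and not the code from my experiments: the function names, the toy loss `(x - 3)**2`, and the defaults `rho=0.95` and `eps=1e-6` are assumptions chosen for the example, and the batch normalization omits the learned scale and shift parameters.

```python
import math
import random


def dropout(activations, rate, rng):
    """Inverted dropout: zero each unit with probability `rate`,
    and rescale the survivors so the expected activation is unchanged."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def batch_norm(batch, eps=1e-5):
    """Normalize one feature over a mini-batch to roughly zero mean and
    unit variance (learned scale/shift left out for brevity)."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [(x - mean) / math.sqrt(var + eps) for x in batch]


def adadelta_step(x, grad, state, rho=0.95, eps=1e-6):
    """One Adadelta update for a scalar parameter.
    `state` holds running averages of squared gradients and squared steps."""
    avg_sq_grad, avg_sq_step = state
    avg_sq_grad = rho * avg_sq_grad + (1 - rho) * grad * grad
    # Step size is the ratio of the two RMS values; no global learning rate.
    step = -math.sqrt(avg_sq_step + eps) / math.sqrt(avg_sq_grad + eps) * grad
    avg_sq_step = rho * avg_sq_step + (1 - rho) * step * step
    return x + step, (avg_sq_grad, avg_sq_step)


# Toy usage: take 100 Adadelta steps on f(x) = (x - 3)^2, starting from x = 0.
x, state = 0.0, (0.0, 0.0)
for _ in range(100):
    x, state = adadelta_step(x, 2.0 * (x - 3.0), state)

dropped = dropout([1.0] * 1000, rate=0.5, rng=random.Random(0))
normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
```

Note that Adadelta starts with very small steps (on the order of `sqrt(eps)`), so on this toy problem `x` moves towards 3 only gradually.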

I have experimented with scikit-learn's SVM implementation alongside my experiments with neural networks, but I find its performance to be very poor in comparison, even after grid-searching over hyperparameters. I realize that there are countless other methods, and that SVMs can be considered a subclass of NNs, but still.
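For reference, the kind of grid search I mean looks roughly like the sketch below. The synthetic dataset, the RBF kernel, the grid values, and the scaling step are all assumptions made for this example rather than my actual setup. The scaler is included because RBF SVMs are known to be sensitive to feature scale, so a comparison without it may be unfair to the SVM.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in data (assumption: the real data is not shown in the post).
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Scale features inside the pipeline so each CV fold is scaled on its own
# training split only.
pipeline = make_pipeline(StandardScaler(), SVC(kernel="rbf"))

# Hypothetical grid over the two main RBF-SVM hyperparameters.
param_grid = {
    "svc__C": [0.1, 1, 10, 100],
    "svc__gamma": [0.001, 0.01, 0.1, 1],
}

search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)

# Accuracy of the best configuration on the held-out split.
test_accuracy = search.score(X_test, y_test)
```

`search.best_params_` then holds the selected `C` and `gamma`, and `test_accuracy` is the number to compare against the neural network on the same split.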

So, to my question:

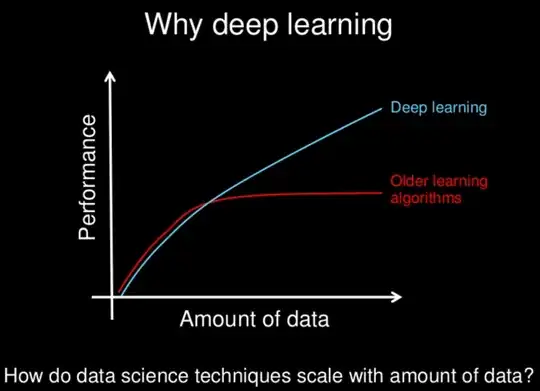

With all the newer methods being researched for neural networks, have they slowly become, or will they become, "superior" to other methods? Neural networks have their disadvantages, as do other methods, but with all the new techniques, have these disadvantages been mitigated to the point of insignificance?

I realize that oftentimes "less is more" in terms of model complexity, but that too can be architected for in neural networks. The idea of "no free lunch" forbids us from assuming that one approach will always reign supreme. It's just that my own experiments, along with countless papers reporting awesome performance from various NNs, indicate that there might be, at the least, a very cheap lunch.