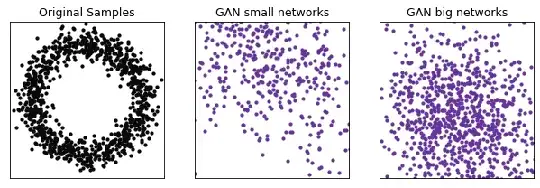

I am working with generative adversarial networks (GANs), and one of my current goals is to generate two-dimensional samples distributed on a circle (see animation). With small networks (3 hidden layers of 50 neurons each), training is more stable than with bigger networks (3 hidden layers of 500 neurons each). All other hyperparameters are identical (see the implementation details below).
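For concreteness, here is a minimal sketch of the kind of target distribution described above. It assumes a unit-radius circle with a uniformly distributed angle and no added noise; `sample_circle` is a hypothetical helper for illustration, not the code used for the animation.

```python
import math
import torch

def sample_circle(n: int, radius: float = 1.0) -> torch.Tensor:
    """Draw n points on a circle (assumed: uniform angle, no radial noise)."""
    # Uniform angle in [0, 2*pi); each point is (r*cos(theta), r*sin(theta))
    theta = 2 * math.pi * torch.rand(n)
    return torch.stack((radius * torch.cos(theta), radius * torch.sin(theta)), dim=1)

real = sample_circle(50)
print(real.shape)  # torch.Size([50, 2])
```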

I am wondering whether anyone can explain why this happens. I could of course tune the other hyperparameters until the bigger networks also perform well, but I would like to know whether there are heuristics for what needs to change when the network size changes.

Network/Training parameters

I use PyTorch with the following settings for the GAN:

Networks:

- Generator/Discriminator Architecture (all dense layers): 100-50-50-50-2 (small); 100-500-500-500-2 (big)

- Dropout: p=0.4 for the generator (all layers except the last), p=0 for the discriminator

- Activation functions: LeakyReLU (slope 0.1)
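The network settings above can be sketched in PyTorch as follows, mainly to compare the parameter counts of the two sizes. This is an illustration under assumptions, not the original implementation: `make_mlp` and `count_params` are hypothetical helpers, dropout is placed after every hidden activation (none on the output layer), and the discriminator is assumed to take a 2-D point and return a single logit (the list above gives 100-...-2 for both networks, but the discriminator must consume 2-D samples).

```python
import torch
from torch import nn

def make_mlp(in_dim: int, hidden: list[int], out_dim: int, dropout: float) -> nn.Sequential:
    """Dense net: Linear -> LeakyReLU(0.1) -> Dropout per hidden layer, plain Linear output."""
    layers: list[nn.Module] = []
    prev = in_dim
    for width in hidden:
        layers += [nn.Linear(prev, width), nn.LeakyReLU(0.1), nn.Dropout(dropout)]
        prev = width
    # Output layer: no activation and no dropout
    layers.append(nn.Linear(prev, out_dim))
    return nn.Sequential(*layers)

def count_params(model: nn.Module) -> int:
    return sum(p.numel() for p in model.parameters())

for name, width in [("small", 50), ("big", 500)]:
    gen = make_mlp(100, [width] * 3, 2, dropout=0.4)
    disc = make_mlp(2, [width] * 3, 1, dropout=0.0)  # assumed: 2-D input, 1 logit output
    print(name, count_params(gen), count_params(disc))
# small 10252 5301
# big 552502 503001
```

The hidden-to-hidden layers dominate the difference: each one grows from 2,550 to 250,500 parameters, roughly 100x, while the learning rate and batch size stay fixed.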

Training:

- Optimizer: Adam

- Learning Rate: 1e-5 (for both networks)

- Beta1, Beta2: 0.9, 0.999

- Batch size: 50
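A minimal sketch of how these training settings plug into one alternating GAN update. The loss is an assumption, since the post does not state it: the standard binary cross-entropy on logits with the non-saturating generator objective. The helpers, the unit-circle data and the 2-input/1-logit discriminator are likewise illustrative assumptions, not the original code.

```python
import math
import torch
from torch import nn

torch.manual_seed(0)
LATENT, HIDDEN, BATCH = 100, [50, 50, 50], 50  # swap HIDDEN to [500] * 3 for the big nets

def make_mlp(in_dim, hidden, out_dim, dropout):
    layers, prev = [], in_dim
    for h in hidden:
        layers += [nn.Linear(prev, h), nn.LeakyReLU(0.1), nn.Dropout(dropout)]
        prev = h
    layers.append(nn.Linear(prev, out_dim))  # no activation/dropout on the output
    return nn.Sequential(*layers)

def sample_circle(n):
    # Assumed target: unit circle, uniform angle
    theta = 2 * math.pi * torch.rand(n)
    return torch.stack((torch.cos(theta), torch.sin(theta)), dim=1)

G = make_mlp(LATENT, HIDDEN, 2, dropout=0.4)
D = make_mlp(2, HIDDEN, 1, dropout=0.0)  # assumed: outputs one logit per point

# Settings from the list above: Adam, lr=1e-5, betas=(0.9, 0.999)
opt_g = torch.optim.Adam(G.parameters(), lr=1e-5, betas=(0.9, 0.999))
opt_d = torch.optim.Adam(D.parameters(), lr=1e-5, betas=(0.9, 0.999))
bce = nn.BCEWithLogitsLoss()

def train_step():
    real = sample_circle(BATCH)
    fake = G(torch.randn(BATCH, LATENT))

    # Discriminator: label real as 1, fake as 0; detach so G gets no gradient here
    opt_d.zero_grad()
    d_loss = bce(D(real), torch.ones(BATCH, 1)) + bce(D(fake.detach()), torch.zeros(BATCH, 1))
    d_loss.backward()
    opt_d.step()

    # Generator: non-saturating loss, push D to label fakes as real
    opt_g.zero_grad()
    g_loss = bce(D(fake), torch.ones(BATCH, 1))
    g_loss.backward()
    opt_g.step()
    return d_loss.item(), g_loss.item()

for step in range(3):
    d, g = train_step()
    print(f"step {step}: d_loss={d:.3f} g_loss={g:.3f}")
```

Stray discriminator gradients from the generator step are harmless here because `opt_d.zero_grad()` clears them before the next discriminator update.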