I am quite new to GANs, and I am reading about WGAN vs. DCGAN.

Regarding the Wasserstein GAN (WGAN), I read the following here:

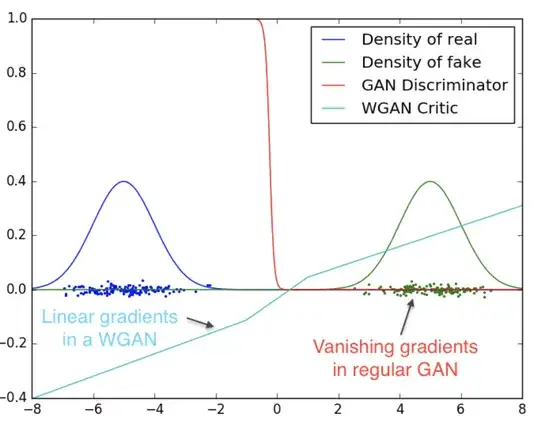

> Instead of using a discriminator to classify or predict the probability of generated images as being real or fake, the WGAN changes or replaces the discriminator model with a critic that scores the realness or fakeness of a given image.
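To make the quoted difference concrete, here is a minimal sketch of only the *final step* of each model. It assumes both models end in the same linear layer. The feature vector, weights, and function names are hypothetical and chosen only for illustration. The DCGAN-style discriminator passes the logit through a sigmoid, so its output is squashed into (0, 1). The WGAN critic returns the raw, unbounded value.

```python
import math

def linear_head(features, weights, bias):
    """Hypothetical final linear layer shared by both sketches."""
    return sum(f * w for f, w in zip(features, weights)) + bias

def dcgan_discriminator_output(features, weights, bias):
    # DCGAN-style discriminator: a sigmoid squashes the logit into (0, 1),
    # so the output can be read as P(image is real).
    logit = linear_head(features, weights, bias)
    return 1.0 / (1.0 + math.exp(-logit))

def wgan_critic_output(features, weights, bias):
    # WGAN critic: no sigmoid. The raw value is the "realness" score and can
    # be any real number (negative, or much larger than 1).
    return linear_head(features, weights, bias)

# Hypothetical features extracted from one image, plus hypothetical weights.
features = [2.0, -1.0, 3.0]
weights = [1.5, 0.5, 2.0]
bias = 0.5

print(dcgan_discriminator_output(features, weights, bias))  # close to 1
print(wgan_critic_output(features, weights, bias))          # 9.0
```

The same logit of 9.0 becomes a probability very close to 1 in the discriminator, while the critic reports 9.0 as-is.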

In practice, I don't understand the difference between a score of the realness or fakeness of a given image and a probability that a generated image is real or fake.

Aren't scores probabilities?
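One way to probe this question is to look at how the critic's scores are used in training. Below is a minimal sketch, assuming the usual WGAN critic objective: the critic minimizes the mean score on fake images minus the mean score on real images. The score values and function name are hypothetical. The sketch also omits the Lipschitz constraint that a real WGAN places on the critic (weight clipping in the original paper, a gradient penalty in WGAN-GP). It shows that adding the same constant to every score leaves the loss unchanged, so a single score has no fixed scale the way a probability does.

```python
def critic_wasserstein_loss(real_scores, fake_scores):
    # WGAN-style critic loss: mean(fake scores) - mean(real scores).
    # Minimizing it pushes real scores above fake scores; only the gap matters.
    mean_real = sum(real_scores) / len(real_scores)
    mean_fake = sum(fake_scores) / len(fake_scores)
    return mean_fake - mean_real

# Hypothetical critic scores; note they are not confined to [0, 1].
real_scores = [3.0, 5.0]
fake_scores = [-1.0, 1.0]

# Shift every score by the same constant.
shifted_real = [s + 100.0 for s in real_scores]
shifted_fake = [s + 100.0 for s in fake_scores]

print(critic_wasserstein_loss(real_scores, fake_scores))    # -4.0
print(critic_wasserstein_loss(shifted_real, shifted_fake))  # -4.0
```

A probability of 0.5 has a fixed meaning, whereas a critic score of 5.0 only says something relative to the scores the critic gives other images.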