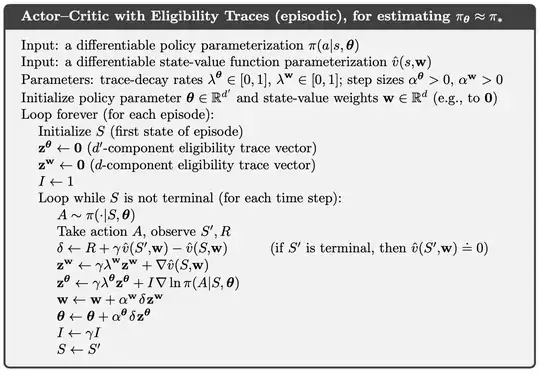

The pseudocode below is taken from Barto and Sutton's "Reinforcement Learning: an introduction". It shows an actor-critic implementation with eligibility traces. My question is: if I set $\lambda^{\theta}=1$ and replace $\delta$ with the immediate reward $R_t$, do I get a backwards implementation of REINFORCE?

Asked

Active

Viewed 340 times