I'm new to deep learning. I wanted to know: do we use pre-processing in deep learning, or is it only used in machine learning? I searched the internet for it and its methods, but I didn't find a suitable answer.

3 Answers

Yes, sure, data pre-processing is also done in deep learning. For example, we often normalize (or scale) the inputs to neural networks. If the inputs are images, we often resize them so that they all have the same dimensions. Of course, the pre-processing step that you apply depends on your data, neural network, and task.
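As a rough illustration of the two steps mentioned above (resizing images to a common size and scaling the inputs), here is a minimal NumPy sketch. The helper names `resize_nearest` and `scale_to_unit`, the 32x32 target size, and the random stand-in images are assumptions made for this example; in practice you would more likely use the resizing utilities of your image or deep learning library.

```python
import numpy as np

def resize_nearest(image, out_h, out_w):
    """Resize an (H, W, C) image with nearest-neighbour sampling (hypothetical helper)."""
    in_h, in_w = image.shape[:2]
    # For every output row/column, pick the index of the source row/column.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return image[rows[:, None], cols[None, :]]

def scale_to_unit(image):
    """Map uint8 pixel values from [0, 255] to float32 values in [0, 1]."""
    return image.astype(np.float32) / 255.0

# Random stand-in images with different sizes (assumed data, not a real dataset).
rng = np.random.default_rng(0)
sizes = [(40, 60), (32, 32), (100, 80)]
images = [rng.integers(0, 256, size=(h, w, 3), dtype=np.uint8) for h, w in sizes]

# After resizing, all images share one shape and can be stacked into a batch.
batch = np.stack([scale_to_unit(resize_nearest(img, 32, 32)) for img in images])
print(batch.shape, batch.dtype)
```

Without the resize step, `np.stack` would fail because the images have different shapes, which is the practical reason this kind of pre-processing is so common.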

Here and here are two examples of implementations that perform a pre-processing step (normalization in the second case). You can find more explanations and examples here and probably here too.


Adding to nbro's answer: the less you have to normalize, balance, preprocess, or augment, the better, because you can then be more confident that the accuracy is an achievement of the model rather than of data massaging. For example, suppose you can achieve the same accuracy on an image dataset using either of two approaches:

1) for each image, subtract the global mean and divide by the global standard deviation;

2) the same as 1), plus random flips, random crops, color jitter, etc.,

then 1) is the better solution if it achieves comparable accuracy, because the model needs less help from the data pipeline and is therefore more general. The same applies to balancing the data: if you can train a good model without it, that is an additional strength.
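To make the two approaches concrete, here is a small NumPy sketch. It is only an illustration under assumptions: the random array `train` stands in for an image dataset, the `augment` helper and the 28-pixel crop size are made up for this example, and color jitter is left out for brevity.

```python
import numpy as np

def standardize(images, mean, std):
    """Approach 1: subtract the global mean and divide by the global std."""
    return (images - mean) / std

def augment(image, rng, crop=28):
    """Extra steps of approach 2: random horizontal flip and random crop."""
    if rng.random() < 0.5:
        image = image[:, ::-1]  # flip along the width axis
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    return image[top:top + crop, left:left + crop]

rng = np.random.default_rng(0)
# Stand-in for a training set of 16 RGB images of size 32x32 (assumed data).
train = rng.random((16, 32, 32, 3)).astype(np.float32)

# Global statistics are computed once, over the whole training set.
mean, std = train.mean(), train.std()

approach_1 = standardize(train, mean, std)
approach_2 = np.stack([augment(img, rng) for img in approach_1])

print(approach_1.shape, approach_2.shape)
```

Approach 2 adds randomness and extra hyperparameters (flip probability, crop size), which is the additional machinery the answer suggests avoiding when approach 1 alone reaches comparable accuracy.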


Preprocessing in deep learning is about making the data compatible with the input and output tensor shapes of the model architecture.

So the critical aspect of preprocessing in deep learning is how to encode the data into a tensor with the shape the model expects as input, so that it can be fed into the deep learning model.

As you already know, deep learning is intended to reduce human intervention and feature engineering (the opposite of what traditional machine learning does). Therefore, normalization and feature scaling can be considered optional in deep learning, because they are a form of feature engineering, unless they are required to make the data compatible with the model's input. This applies mainly when your data contains physical units that represent the real world: you can just let the model learn from those real-world values.

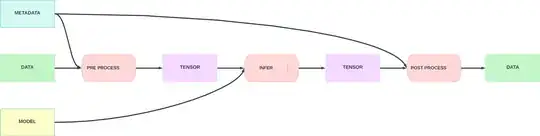

When you encode the data into a tensor, you might lose some information, such as metadata or index mappings; this is a common mistake. You should keep the metadata or hard-code it.

The metadata is needed to decode the tensor back into data that represents the original data during post-processing or inference.
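The encode/decode round trip described above could look roughly like the following sketch. The label names, the one-hot encoding, and the JSON format for the metadata are assumptions chosen for illustration; the point is only that the index mapping is saved at training time and reloaded at inference time.

```python
import json
import numpy as np

# Raw labels as they appear in the data (assumed example values).
labels = ["cat", "dog", "bird", "dog", "cat"]

# Pre-processing: build the index mapping and encode labels as a tensor.
classes = sorted(set(labels))
class_to_index = {name: i for i, name in enumerate(classes)}
encoded = np.array([class_to_index[name] for name in labels])
one_hot = np.eye(len(classes), dtype=np.float32)[encoded]  # shape (5, 3)

# Keep the metadata: without it, index 0/1/2 has no meaning later on.
metadata_json = json.dumps({"classes": classes})

# Post-processing at inference time: reload the mapping and decode.
restored_classes = json.loads(metadata_json)["classes"]
scores = one_hot  # stand-in for the model's output scores
decoded = [restored_classes[i] for i in scores.argmax(axis=1)]

print(decoded)
```

If `classes` were rebuilt from a different dataset at inference time, the order could change and the decoded labels would silently be wrong, which is why the mapping is stored rather than recomputed.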

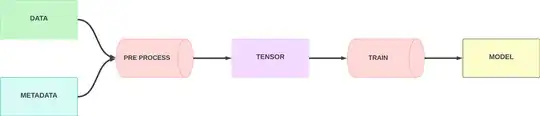

So, this is the overall deep learning pipeline:

Training

Inference
