I am new to neural networks, so my questions are still very basic. I know that most neural network tools allow, and even require, the user to choose hyperparameters such as:

- number of hidden layers

- number of neurons in each layer

- number of inputs and outputs

- batch size, number of epochs, and other settings related to backpropagation and gradient descent

But as I keep reading and watching YouTube videos, I see that there are other important "mini-parameters", such as:

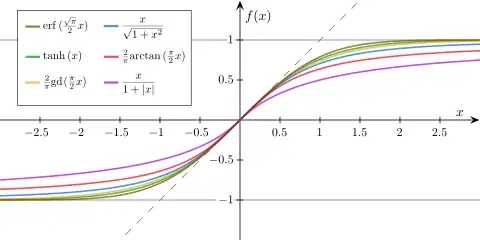

- type of activation function

- fine-tuning of the activation function (for example, the shift and slope of a sigmoid)

- whether the output layer has an activation function

- range of the weights (from 0 to 1, from -1 to 1, from -100 to +100, or some other range)

- whether the weights are drawn from a normal distribution or from some other random distribution

- etc.
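To make these "mini-parameters" concrete, here is a minimal, framework-free Python sketch of one dense layer in which the sigmoid's shift and slope, the weight range, the weight distribution, and whether an activation is applied at all are explicit arguments. It is only an illustration written for this question, not how any particular library implements layers; the names `sigmoid`, `init_weights`, and `dense_forward` are hypothetical, and biases are omitted for brevity.

```python
import math
import random


def sigmoid(x, shift=0.0, slope=1.0):
    """Logistic sigmoid with an adjustable shift and slope.

    `shift` moves the midpoint (output 0.5) to x = shift;
    `slope` controls how steep the curve is around that midpoint.
    """
    z = slope * (x - shift)
    # Two algebraically equal forms, chosen by sign to avoid overflow in math.exp.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def init_weights(n_in, n_out, scheme="uniform", low=-1.0, high=1.0, std=1.0, seed=None):
    """Create an n_out x n_in weight matrix (hypothetical helper).

    scheme="uniform": each weight is drawn uniformly from [low, high].
    scheme="normal":  each weight is drawn from a normal distribution N(0, std^2).
    """
    rng = random.Random(seed)
    if scheme == "uniform":
        return [[rng.uniform(low, high) for _ in range(n_in)] for _ in range(n_out)]
    if scheme == "normal":
        return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]
    raise ValueError(f"unknown scheme: {scheme!r}")


def dense_forward(inputs, weights, activation=None):
    """Weighted sums for one layer (no bias), optionally passed through an activation.

    activation=None leaves the output linear, e.g. an output layer without an activation.
    """
    pre = [sum(w * x for w, x in zip(row, inputs)) for row in weights]
    if activation is None:
        return pre
    return [activation(z) for z in pre]


if __name__ == "__main__":
    # Normally distributed weights with a small spread, and a steeper-than-default sigmoid.
    w = init_weights(n_in=3, n_out=2, scheme="normal", std=0.5, seed=42)
    hidden = dense_forward([0.2, -0.4, 1.0], w, activation=lambda z: sigmoid(z, slope=2.0))
    print(hidden)
```

Each choice here (shift, slope, `low`/`high`, `std`, `activation=None`) is one of the "mini-parameters" listed above, written out as an ordinary function argument.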

My actual question has two parts:

Part a:

Do I understand correctly that most neural network tools do not let you change those "mini-parameters" when you use "ready-made" solutions? In other words, if I want access to those "mini-parameters", do I need to program the whole neural network myself, or are there "semi-finished products"?

Part b (edited):

For someone who uses neural networks as an everyday tool for solving problems (like a data scientist), how common is it, and how often, to fine-tune the things I call "mini-parameters"? Or are those parameters usually set by the developers who build frameworks like PyTorch, TensorFlow, etc.?

Thank you very much!