In the average reward setting, the quality of a policy is defined as:

$$ r(\pi) = \lim_{h\to\infty}\frac{1}{h} \sum_{j=1}^{h}E[R_j] $$

When we reach the steady state distribution, we can write the above equation as follows:

$$ r(\pi) = \lim_{t\to\infty}E[R_t | A \sim \pi] $$

We can use the incremental update method to find $r(\pi)$:

$$ r(\pi) = \frac{1}{t} \sum_{j=1}^{t} R_j = \bar R_{t-1} + \beta (R_t - \bar R_{t-1})$$

where $ \bar R_{t-1}$ is the estimate of the average reward $r(\pi)$ at time step $t-1$.

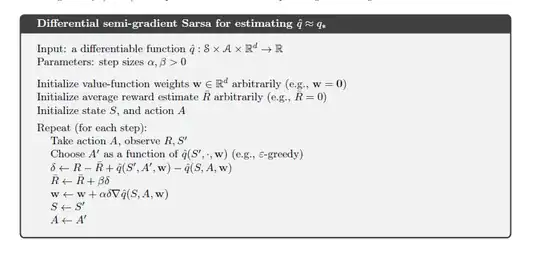

We use this incremental update rule in the SARSA algorithm:

Now, in this above algorithm, we can see that the policy will change with respect to time. But to calculate the $r(\pi)$, the agent should follow the policy $\pi$ for a long period of time. Then how we are using $r(\pi)$ if the policy changes with respect to time?