When I studied neural networks, the "parameters" were things like the learning rate, batch size, etc. But GPT-3's arXiv paper does not say exactly what its parameters are; it only gives a small hint that they might just be sentences.

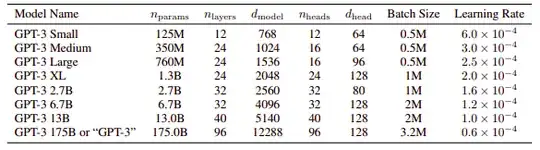

Even tutorial sites like this one start by talking about the usual parameters, but then also say "model_name: This indicates which model we are using. In our case, we are using the GPT-2 model with 345 million parameters or weights". So are the 175 billion "parameters" just neural network weights? If so, why are they called parameters? GPT-3's paper shows that there are only 96 layers, so I'm assuming it's not a very deep network, but an extremely wide one. Or does it mean that each "parameter" is just a representation of the encoders or decoders?
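As a rough sanity check on the "parameters are just weights" reading, here is a back-of-the-envelope count. Only the 96 layers come from what I quoted above; the hidden size of 12288, the vocabulary of 50257 tokens, the context length of 2048 and the 4x feed-forward width are assumptions I took from the configuration reported for the largest GPT-3 model, and the count ignores biases and layer-norm weights. It is a sketch of the arithmetic, not the paper's own accounting.

```python
# Rough weight count for a GPT-3-sized decoder-only Transformer.
# n_layers is from the question; every other size below is an assumption.
n_layers = 96
d_model = 12288      # assumed hidden size
vocab_size = 50257   # assumed vocabulary size
n_ctx = 2048         # assumed context length

# Attention: four d_model x d_model matrices (query, key, value, output).
attention_weights = 4 * d_model * d_model
# Feed-forward: d_model -> 4*d_model -> d_model (assumed 4x expansion).
feed_forward_weights = 2 * d_model * (4 * d_model)
per_layer = attention_weights + feed_forward_weights

# Token embeddings plus learned position embeddings.
embedding_weights = vocab_size * d_model + n_ctx * d_model

total = n_layers * per_layer + embedding_weights
print(f"{per_layer:,} weights per layer")
print(f"{total:,} weights in total")
```

Under these assumptions this comes out at roughly 1.8 billion weights per layer and about 175 billion overall, which would fit the "wide rather than deep" guess.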

An excerpt from this website shows tokens:

In this case, there are two additional parameters that can be passed to gpt2.generate(): truncate and include_prefix. For example, if each short text begins with a <|startoftext|> token and ends with a <|endoftext|>, then setting prefix='<|startoftext|>', truncate='<|endoftext|>', and include_prefix=False, and length is sufficient, then gpt-2-simple will automatically extract the shortform texts, even when generating in batches.

So are the parameters various kinds of tokens that are manually created by humans who try to fine-tune the models? Still, 175 billion such fine-tuning parameters is far too many for humans to create, so I assume the "parameters" are auto-generated somehow.

The attention paper mentions the query-key-value weight matrices as the "parameters". Even if it is these weights, I'd just like to know what kind of process generates these parameters. Who chooses the parameters and specifies the relevance of words? If they are created automatically, how is it done?
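To make the question concrete: in the small networks I studied, nobody chooses the weights. They start out random and gradient descent adjusts them, while things like the learning rate are picked by hand. Below is a minimal sketch of that distinction using a toy linear model. It is not GPT-3's training procedure, and the function and variable names are made up for illustration.

```python
import random

def train(xs, ys, learning_rate=0.05, epochs=2000, seed=0):
    """Fit y = w*x + b by gradient descent on the mean squared error.

    learning_rate and epochs are hyperparameters chosen by a human;
    w and b are the parameters (weights) the training loop finds.
    """
    rng = random.Random(seed)
    w = rng.uniform(-1.0, 1.0)  # parameters start as random numbers
    b = rng.uniform(-1.0, 1.0)
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # The update rule, not a person, decides the new parameter values.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = train(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Here the two learned values end up close to 2 and 1 without anyone setting them. What I'm asking is whether the query-key-value matrices in GPT-3 get their values the same way, just at a vastly larger scale.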