I'm trying to understand the DDPG algorithm using Keras.

I found a site with an implementation and started analyzing the code, but there are two things I can't understand.

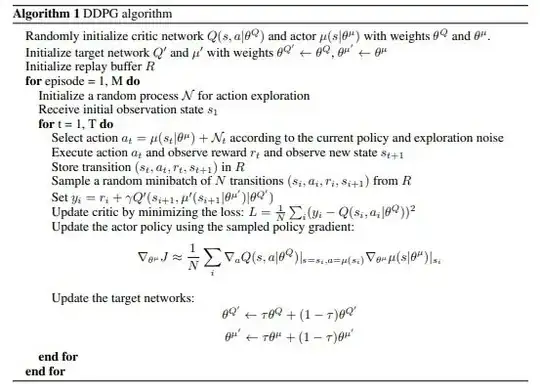

This is the algorithm that the code presented on the page is based on.

In the algorithm image, updating the critic network does not appear to require a gradient, yet the code computes one. Why?

    with tf.GradientTape() as tape:
        target_actions = target_actor(next_state_batch)
        y = reward_batch + gamma * target_critic([next_state_batch, target_actions])
        critic_value = critic_model([state_batch, action_batch])
        critic_loss = tf.math.reduce_mean(tf.math.square(y - critic_value))

    critic_grad = tape.gradient(critic_loss, critic_model.trainable_variables)
    critic_optimizer.apply_gradients(zip(critic_grad, critic_model.trainable_variables))
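
To make the question concrete, here is a minimal sketch of one possible reading: assuming the algorithm image states the critic update as "minimize the mean squared error between the targets y and Q(s, a)", gradient descent is one common way to carry out that minimization, even if the image never writes the gradient out. The one-parameter critic `critic(w, s, a) = w * s * a`, the helper names, and the data below are all hypothetical and are not taken from the Keras code.

```python
# Toy illustration (hypothetical one-parameter critic, not the Keras model):
# "minimizing the loss" carried out by repeated gradient steps.

def critic(w, s, a):
    # Q(s, a) for a made-up critic with a single weight w
    return w * s * a

def mse_loss(w, batch):
    # mean over the batch of (y - Q(s, a))^2
    return sum((y - critic(w, s, a)) ** 2 for s, a, y in batch) / len(batch)

def mse_grad(w, batch):
    # d/dw of the loss: mean of -2 * s * a * (y - w * s * a)
    return sum(-2.0 * s * a * (y - critic(w, s, a)) for s, a, y in batch) / len(batch)

# (state, action, target y) triples generated with a "true" weight of 3.0
batch = [(1.0, 2.0, 6.0), (2.0, 1.0, 6.0), (0.5, 2.0, 3.0)]

w = 0.0
loss_before = mse_loss(w, batch)
for _ in range(200):
    # plain gradient descent step with learning rate 0.05
    w -= 0.05 * mse_grad(w, batch)
loss_after = mse_loss(w, batch)
```

If this reading is right, `tape.gradient` followed by `apply_gradients` in the snippet above would play the role of `mse_grad` and the update line in this toy.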

My second question: in the algorithm image, the actor's policy gradient is computed as the product of two gradients, but the code only computes a single gradient through the critic network and never multiplies it by a second one. Why?

    with tf.GradientTape() as tape:
        actions = actor_model(state_batch)
        critic_value = critic_model([state_batch, actions])
        # Used `-value` as we want to maximize the value given
        # by the critic for our actions
        actor_loss = -tf.math.reduce_mean(critic_value)

    actor_grad = tape.gradient(actor_loss, actor_model.trainable_variables)
    actor_optimizer.apply_gradients(zip(actor_grad, actor_model.trainable_variables))
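
A minimal sketch of how a single backward pass could contain both gradients: assuming the algorithm image writes the actor update as the product of the critic's gradient with respect to the action and the actor's gradient with respect to its parameters, the chain rule says that differentiating Q(s, μ(s)) directly with respect to the actor parameters yields that same product. The scalar `actor` and `critic` functions below are hypothetical toys, not the Keras models, and the check uses a finite-difference derivative rather than TensorFlow.

```python
# Toy illustration with a hypothetical scalar actor and critic (not the Keras models).

def actor(theta, s):
    # mu(s) = theta * s
    return theta * s

def critic(s, a):
    # Q(s, a) = -(a - 2*s)^2, which is largest when a = 2*s
    return -(a - 2.0 * s) ** 2

def dq_da(s, a):
    # gradient of the critic with respect to the action
    return -2.0 * (a - 2.0 * s)

def dmu_dtheta(theta, s):
    # gradient of the actor with respect to its parameter
    return s

theta, s = 0.5, 3.0
a = actor(theta, s)

# The explicit product of the two gradients
product = dq_da(s, a) * dmu_dtheta(theta, s)

# Derivative of the composed function Q(s, mu(s)) with respect to theta,
# estimated by central finite differences
eps = 1e-6
composed = (critic(s, actor(theta + eps, s)) - critic(s, actor(theta - eps, s))) / (2 * eps)
```

Here `product` and `composed` agree up to numerical error. As far as I understand, `tape.gradient(actor_loss, actor_model.trainable_variables)` backpropagates through `critic_model` into `actor_model`, so the multiplication would happen implicitly inside that one call.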