I have two data sets: a training set and a test set. I use the training set to train a deep Q-network (DQN) variant, and every 5000 epochs I evaluate the agent's Q values on the test set. I find that neither the Q values nor the policies on the test set converge.

iteration $x$: Q values for the first 5 test data are [15.271439, 13.013742, 14.137051, 13.96463, 11.490129] with policies: [15, 0, 0, 0, 15]

iteration $x+10000$: Q values for the first 5 test data are [15.047309, 15.5233555, 16.786497, 16.100864, 13.066223] with policies: [0, 0, 0, 0, 15]
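To put a number on the change between these two checkpoints, here is a minimal sketch in plain Python. The helper names `mean_abs_change` and `policy_agreement` are hypothetical, chosen for illustration and not taken from any library; the values are copied from the two iterations above.

```python
# Q values and greedy actions for the first 5 test data points,
# copied from the two logged iterations above.
q_at_x = [15.271439, 13.013742, 14.137051, 13.96463, 11.490129]
q_at_x_plus_10000 = [15.047309, 15.5233555, 16.786497, 16.100864, 13.066223]
policy_at_x = [15, 0, 0, 0, 15]
policy_at_x_plus_10000 = [0, 0, 0, 0, 15]


def mean_abs_change(old, new):
    """Average absolute difference between two equally long lists of Q values."""
    return sum(abs(n - o) for o, n in zip(old, new)) / len(old)


def policy_agreement(old, new):
    """Fraction of test points on which the two checkpoints pick the same action."""
    return sum(o == n for o, n in zip(old, new)) / len(old)


q_drift = mean_abs_change(q_at_x, q_at_x_plus_10000)
agreement = policy_agreement(policy_at_x, policy_at_x_plus_10000)
print(f"mean |dQ| = {q_drift:.3f}, policy agreement = {agreement:.0%}")
```

On these five points the Q values move by about 1.82 on average and the chosen action changes on one of the five. Tracking both numbers across checkpoints on the full test set would show whether the drift is shrinking over time.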

This means that the weights of the neural network are not converging. Although I can manually test the policy at each iteration and decide which one performs best, I would like to know: would correct training of the network lead to weight convergence?

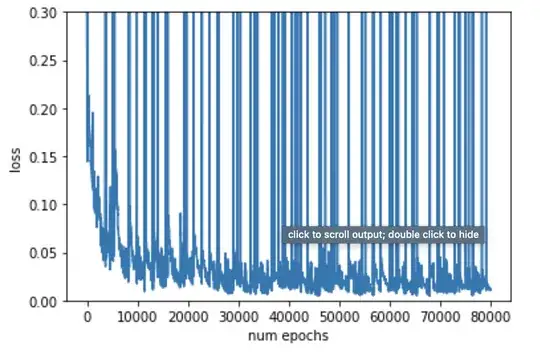

You can see that the loss decreases over time; however, there are occasional spikes in the loss that do not seem to go away.
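To locate the spikes rather than eyeball them, one option is to compare each logged loss against the median of the preceding few values. The sketch below is only an illustration: `flag_spikes`, its `window` and `factor` parameters, and the example loss values are all made up for this purpose and are not read off the plot.

```python
from statistics import median


def flag_spikes(losses, window=5, factor=3.0):
    """Return indices whose loss exceeds `factor` times the median
    of the `window` values immediately before it."""
    spikes = []
    for i in range(window, len(losses)):
        baseline = median(losses[i - window:i])
        if losses[i] > factor * baseline:
            spikes.append(i)
    return spikes


# Made-up loss values purely for illustration (not taken from the plot).
example_losses = [1.0, 0.9, 0.8, 0.8, 0.7, 4.0, 0.7, 0.6, 0.6, 0.5, 3.5, 0.5]
print(flag_spikes(example_losses))  # prints [5, 10]
```

Using the median rather than the mean keeps one spike from inflating the baseline for the points that follow it. The flagged indices could then be lined up with training events such as target-network updates, if the variant uses them.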