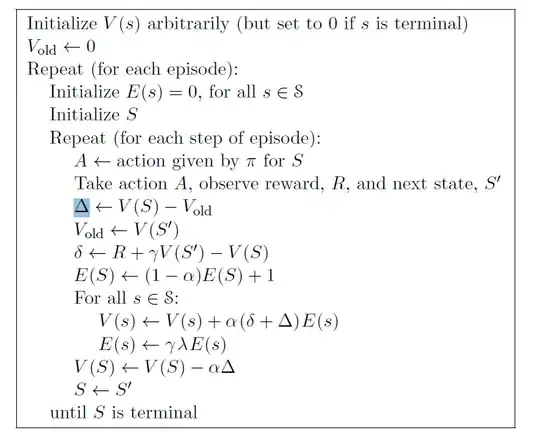

In Section 7.4 of the RL textbook by Sutton & Barto, the authors discuss "True online TD($\lambda$)". The figure above (7.10 in the book) shows the algorithm.

At the end of each step, the algorithm sets $V_{old} \leftarrow V(S')$ and also $S \leftarrow S'$. So when we move to the next step, $\Delta \leftarrow V(S) - V_{old}$ becomes $V(S') - V(S')$, which is 0. It seems that $\Delta$ is always going to be 0 after step 1. If that is true, the $\Delta$ term does not make any sense to me. Can you please elaborate on how $\Delta$ is updated?
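
To make my reading concrete, here is a minimal Python sketch of the bookkeeping as I understand it. It is not the book's algorithm: the function name `trace_delta`, the toy states, the constant `0.1` standing in for the real value update, and the initialization of `V_old` are all assumptions made for illustration. The only thing it models is the ordering I described, where $V_{old} \leftarrow V(S')$ happens after the values have been updated.

```python
# Hypothetical trace of my reading: V_old <- V(S') and S <- S' both happen
# at the END of a step, after V has already been updated for that step.
def trace_delta(V, path):
    """Return the Delta computed at the start of each step along `path`."""
    S = path[0]
    V_old = V[S]                      # assumed initialization before step 1
    deltas = []
    for S_next in path[1:]:
        deltas.append(V[S] - V_old)   # Delta <- V(S) - V_old
        for s in V:                   # stand-in for the real update of all V(s)
            V[s] += 0.1
        V_old = V[S_next]             # V_old <- V(S'), read after the update
        S = S_next                    # S <- S'
    return deltas


deltas = trace_delta({"a": 0.0, "b": 1.0, "c": 2.0}, ["a", "b", "c", "a"])
print(deltas)
```

Under this ordering every entry of `deltas` is zero, which is exactly the behaviour that confuses me. If $V_{old}$ is actually stored at a different point in the step, I would like to know where.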