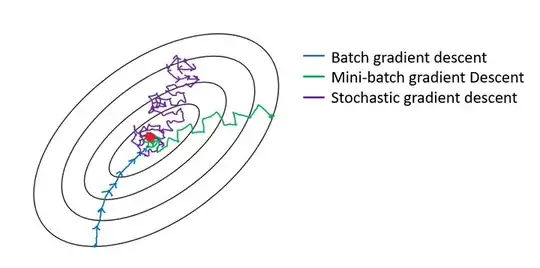

SGD is able to jump out of local minima that would otherwise trap BGD

I don't really understand the above statement. Could someone please provide a mathematical explanation for why SGD (Stochastic Gradient Descent) is able to escape local minima, while BGD (Batch Gradient Descent) can't?
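For reference, here is a rough sketch of how I understand the two update rules. The notation is my own assumption, not taken from the source of the statement: $\theta$ for the parameters, $\eta$ for the learning rate, $\ell_i$ for the loss on the $i$-th of $N$ training examples, and $\xi_t$ for the gradient noise.

```latex
% Full (batch) loss: the average of the per-example losses
L(\theta) = \frac{1}{N} \sum_{i=1}^{N} \ell_i(\theta)

% BGD: every step uses the exact gradient of the full loss
\theta_{t+1} = \theta_t - \eta \, \nabla L(\theta_t)

% SGD: every step uses the gradient of one example i_t, drawn uniformly at random
\theta_{t+1} = \theta_t - \eta \, \nabla \ell_{i_t}(\theta_t)
             = \theta_t - \eta \left( \nabla L(\theta_t) + \xi_t \right),
\qquad \xi_t = \nabla \ell_{i_t}(\theta_t) - \nabla L(\theta_t),
\qquad \mathbb{E}[\xi_t] = 0
```

If this is right, then at a local minimum $\theta^*$ we have $\nabla L(\theta^*) = 0$, so BGD stops moving, whereas the individual $\nabla \ell_i(\theta^*)$ are generally nonzero, so an SGD step still moves the parameters. Is this noise term what the statement is referring to?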

P.S.

While searching online, I read that it has something to do with "oscillations" while taking steps towards the global minimum. What does that mean?