The main difference between a Bayesian network and a Markov chain is not that a Markov chain lacks direction. It is that the graph of a Bayesian network is non-trivial, whereas the graph of a $k$th order Markov chain is somewhat trivial: the previous $k$ nodes all point to the current node. To see why, let each node represent a random variable $X_i$. Then each node $X_i$ with $t-k \leq i < t$ is connected by a directed edge to $X_t$. That is, $(X_i, X_t) \in E$ for $t-k \leq i < t$, where $E$ is the set of edges of the graph.

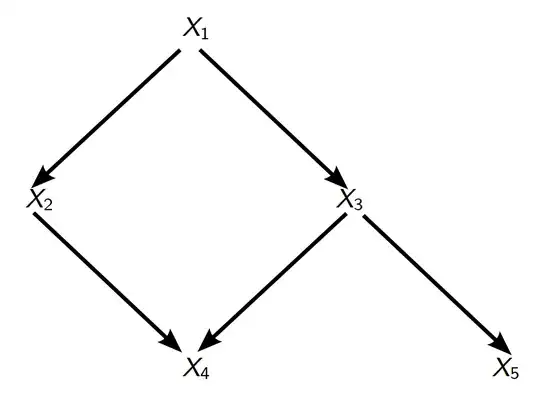

The examples below illustrate this.

Assume that we have a $k$th order Markov chain. Then, by definition, for all $t > k$ we have $\mathbb{P}(X_t = x | X_0,...,X_{t-1}) = \mathbb{P}(X_t = x | X_{t-k},...,X_{t-1})$.
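As a rough sketch of the edge structure this definition implies, the Python below lists the parents of each node in a $k$th order Markov chain. The function name `markov_chain_parents` and the use of plain integer indices for the $X_i$ are assumptions made for illustration only.

```python
def markov_chain_parents(n, k):
    """Return {t: [indices of the parents of X_t]} for X_0, ..., X_{n-1}."""
    # Near the start of the chain fewer than k predecessors exist,
    # so the window is clipped at index 0.
    return {t: list(range(max(0, t - k), t)) for t in range(n)}


parents = markov_chain_parents(n=6, k=2)
for t, pa in parents.items():
    print(f"X_{t} <- {[f'X_{i}' for i in pa]}")
```

Every node gets the same sliding window of predecessors, which is why the graph carries no information beyond the value of $k$.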

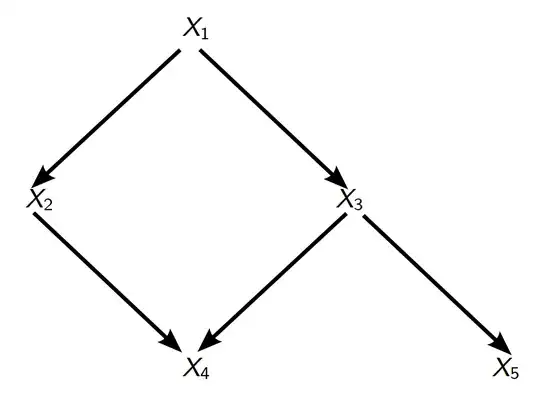

The main difference between the above definition and the definition of a Bayesian network is that, depending on how the edges of the graph are directed, each $X_t$ can have a different set of dependencies. Consider the Bayesian network in the figure below.

We would get that $\mathbb{P}(X_4 = x| X_1, X_2, X_3) = \mathbb{P}(X_4 = x | X_2, X_3)$ and $\mathbb{P}(X_5 = x| X_1, X_2, X_3, X_4) = \mathbb{P}(X_5 = x | X_3)$.

So, in a Bayesian network, the past variables that the current random variable depends on don't have to follow the same fixed 'structure' (the previous $k$ variables) as in a Markov chain.
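To make this concrete, here is a small sketch that checks whether a set of parent relations has the sliding-window form of a $k$th order Markov chain. The helper `has_markov_structure` is hypothetical, and only the two parent sets stated above (for $X_4$ and $X_5$) are used; the parents of the other nodes are not assumed. The check compares graph structure only, not the probabilities themselves.

```python
def has_markov_structure(parents, k, first=1):
    """True if every listed node X_t has exactly its k preceding nodes as parents."""
    for t, pa in parents.items():
        # Window of the k previous indices, clipped at the first index.
        expected = set(range(max(first, t - k), t))
        if set(pa) != expected:
            return False
    return True


# Parent sets read off the two conditional probabilities in the text.
bn_parents = {4: {2, 3}, 5: {3}}

matching_orders = [k for k in range(1, 5) if has_markov_structure(bn_parents, k)]
print(matching_orders)  # prints []
```

No single order $k$ fits both nodes: $X_4$ would need $k = 2$, while $X_5$ would need $k = 1$ and a parent $X_4$ rather than $X_3$.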