I am new to the field of Machine Learning, so I wanted to start off by reading more about the mathematics and history behind it.

I am currently reading what is, in my opinion, a very good and descriptive paper on Statistical Learning Theory: "Statistical Learning Theory: Models, Concepts, and Results". In section 5.5, "Generalization bounds", it states that:

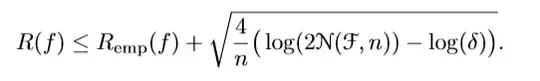

It is sometimes useful to rewrite (17) "the other way round". That is, instead of fixing $\epsilon$ and then computing the probability that the empirical risk deviates from the true risk by more than $\epsilon$, we specify the probability with which we want the bound to hold, and then get a statement which tells us how close we can expect the risk to be to the empirical risk. This can be achieved by setting the right-hand side of (17) equal to some $\delta > 0$, and then solving for $\epsilon$. As a result, we get the statement that with a probability at least $1 - \delta$, any function $f \in F$ satisfies

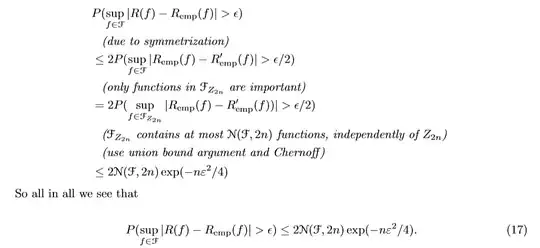
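
As far as I can tell, the algebra behind this step is the following. This is only a sketch: it assumes that (17) has the form $P\left(\sup_{f \in F} |R(f) - R_{\mathrm{emp}}(f)| > \epsilon\right) \le C e^{-n\epsilon^2/4}$, where $C$ is my own shorthand for the factor in front of the exponential and $n$ is the sample size. The exact constants should be checked against the paper. Setting the right-hand side equal to $\delta$ and solving for $\epsilon$:

$$
\begin{aligned}
\delta &= C\, e^{-n\epsilon^2/4} \\
\log \delta &= \log C - \frac{n\epsilon^2}{4} \\
\epsilon &= \sqrt{\frac{4}{n}\left(\log C - \log \delta\right)}
\end{aligned}
$$

Under that assumption, the deviation exceeds this $\epsilon$ with probability at most $\delta$, so with probability at least $1 - \delta$ every $f \in F$ satisfies $R(f) \le R_{\mathrm{emp}}(f) + \sqrt{\frac{4}{n}\left(\log C - \log \delta\right)}$.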

Equation (17) is obtained from the VC symmetrization lemma by applying the union bound and then the Chernoff bound.

What I fail to understand is the part where we rewrite (17) "the other way round". I lack an intuitive understanding of the relation between (17) and (18), and of generalization bounds in general.

Could anyone help me understand these concepts, or at least point me to additional resources (papers, blog posts, etc.) that could help?