The accepted answer does not give a good definition of over-fitting, which is a well-defined concept in reinforcement learning too. For example, the paper Quantifying Generalization in Reinforcement Learning focuses entirely on this issue. Let me give you more details.

Over-fitting in supervised learning

In supervised learning (SL), over-fitting is defined in terms of the difference (or gap) between the performance of the ML model (such as a neural network) on the training dataset and its performance on the test dataset. If the model performs significantly better on the training dataset than on the test dataset, then it has over-fitted the training data. Consequently, it has not generalized (well enough) to data other than the training data (i.e. the test data). The relationship between over-fitting and generalization should now be clearer.
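To make the gap concrete, here is a minimal sketch in plain Python. Everything in it is an assumption chosen for illustration: the synthetic noisy dataset (`make_data`), the 1-nearest-neighbour "memorizer" model, and the sample sizes. The model memorizes every training point, including the noisy ones, so it scores perfectly on the training set and noticeably worse on fresh test data.

```python
import random


def make_data(n, rng, noise=0.2):
    """Synthetic data: the true label is x > 0.5, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = x > 0.5
        if rng.random() < noise:
            y = not y  # label noise that a memorizing model will also learn
        data.append((x, y))
    return data


def fit_memorizer(train):
    """1-nearest-neighbour: predicts the label of the closest training point."""
    def model(x):
        return min(train, key=lambda p: abs(p[0] - x))[1]
    return model


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


rng = random.Random(0)
train = make_data(200, rng)
test = make_data(200, rng)

model = fit_memorizer(train)
train_acc = accuracy(model, train)
test_acc = accuracy(model, test)
gap = train_acc - test_acc  # a large positive gap signals over-fitting

print(f"train accuracy: {train_acc:.2f}")
print(f"test accuracy:  {test_acc:.2f}")
print(f"gap:            {gap:.2f}")
```

The quantity to look at is `gap`: the larger it is, the less the model has generalized beyond the data it was trained on.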

Over-fitting in reinforcement learning

In reinforcement learning (RL) (you can find a brief recap of what RL is here), you want to find an optimal policy or value function (from which the policy can be derived), which can be represented by a neural network (or another model). The environment $E$ is often mathematically modelled as a (partially or fully observable) Markov decision process, and a policy $\pi$ is optimal in $E$ if it leads to the highest expected cumulative reward in the long run in that environment.

In some cases, you also want to know whether your policy $\pi$ can be used in an environment other than the one it was trained in, i.e. whether the knowledge acquired in the training environment $E$ can be transferred to a different (but typically related) environment (or task) $E'$. For example, you may only be able to train your policy in a simulated environment (because of resource or safety constraints), and you then want to transfer the learned policy to the real world. In those cases, you can define over-fitting in the same way as in SL: the policy performs significantly better in the training environment $E$ than in the test environment $E'$. The only difference is terminological: you would say that the learned policy has over-fitted the training environment (rather than that the ML model has over-fitted the training dataset). However, given that the environment provides the data, you could even say in RL that your policy has over-fitted the training data.
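The same gap can be sketched for RL. The toy below is purely illustrative and every name in it is hypothetical: `Corridor` is a made-up environment, `always_right` is a hand-coded stand-in for a policy that was tuned to the training environment $E$ (goal on the right), and the test environment $E'$ simply moves the goal to the left. No learning happens here; the point is only how the two returns are compared.

```python
class Corridor:
    """Toy environment: the agent starts in the middle of a line of cells
    and receives reward 1 when it reaches the goal cell."""

    def __init__(self, goal, size=11, max_steps=20):
        self.goal, self.size, self.max_steps = goal, size, max_steps

    def reset(self):
        self.pos = self.size // 2
        self.t = 0
        return self.pos

    def step(self, action):
        # action is -1 (move left) or +1 (move right); walls clip the move
        self.pos = max(0, min(self.size - 1, self.pos + action))
        self.t += 1
        reached = self.pos == self.goal
        done = reached or self.t >= self.max_steps
        return self.pos, (1.0 if reached else 0.0), done


def average_return(policy, env, episodes=10):
    """Average undiscounted return of `policy` over several episodes."""
    total = 0.0
    for _ in range(episodes):
        state, done = env.reset(), False
        while not done:
            state, reward, done = env.step(policy(state))
            total += reward
    return total / episodes


def always_right(state):
    """Stand-in for a policy fitted to the training environment."""
    return +1


train_env = Corridor(goal=10)  # E: goal at the right end
test_env = Corridor(goal=0)    # E': goal at the left end

train_return = average_return(always_right, train_env)
test_return = average_return(always_right, test_env)
gap = train_return - test_return  # large gap: the policy over-fitted E

print(train_return, test_return, gap)
```

In practice $E$ and $E'$ would be, for example, different levels, random seeds, or a simulator and the real system, and the policy would be a trained model rather than a fixed rule.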

Catastrophic forgetting

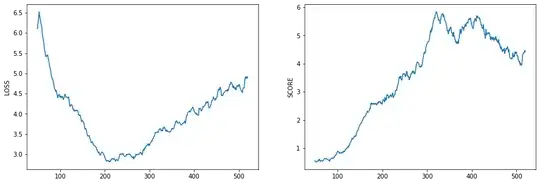

There is also the issue of catastrophic forgetting (CF) in RL, i.e., while learning, your RL agent may forget what it has previously learned, and this can even happen in the same environment. Why am I talking about CF? Because what is happening to you is probably CF, i.e., while learning, the agent performs well for a while, then its performance drops (although I have read a paper that, strangely, defines CF differently in RL). You could also say that over-fitting is happening in your case, but, if you are continuously training and the performance changes, then CF is probably what you need to investigate. So, you should reserve the word over-fitting in RL for cases where you're interested in transfer learning (i.e. the training and test environments do not coincide).
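If you want to check whether your training curve shows this "good for a while, then drops" pattern, a rough heuristic is to smooth the episode returns and flag the first point where the smoothed value falls well below the best smoothed value seen so far. The sketch below is only an assumption about how one might do that: the function names, the window size, and the `tolerance` threshold are all hypothetical, and it assumes returns are positive.

```python
def moving_average(values, window):
    """Trailing moving average; entry k covers values[k : k + window]."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def find_performance_drop(returns, window=5, tolerance=0.5):
    """Return the index of the first episode whose smoothed return falls
    below `tolerance` times the best smoothed return so far, else None.
    Assumes positive returns."""
    best = float("-inf")
    for k, value in enumerate(moving_average(returns, window)):
        best = max(best, value)
        if best > 0 and value < tolerance * best:
            return k + window - 1  # convert back to an episode index
    return None


# Example curve: the agent does well for 10 episodes, then collapses.
collapsing = [10.0] * 10 + [1.0] * 10
steadily_improving = [float(r) for r in range(1, 21)]

drop_at = find_performance_drop(collapsing)
no_drop = find_performance_drop(steadily_improving)
print(drop_at, no_drop)
```

A flagged drop does not by itself prove catastrophic forgetting, but it tells you where in training to start looking.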