I am currently studying Deep Learning by Goodfellow, Bengio, and Courville. In chapter 5.1.2 The Performance Measure, P, the authors say the following:

The choice of performance measure may seem straightforward and objective, but it is often difficult to choose a performance measure that corresponds well to the desired behavior of the system.

In some cases, this is because it is difficult to decide what should be measured. For example, when performing a transcription task, should we measure the accuracy of the system at transcribing entire sequences, or should we use a more fine-grained performance measure that gives partial credit for getting some elements of the sequence correct? When performing a regression task, should we penalize the system more if it frequently makes medium-sized mistakes or if it rarely makes very large mistakes? These kinds of design choices depend on the application.

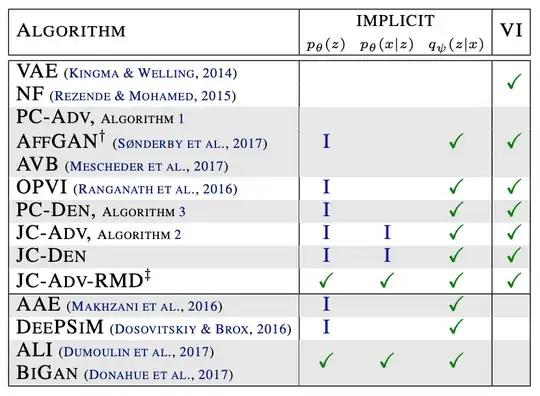

In other cases, we know what quantity we would ideally like to measure, but measuring it is impractical. For example, this arises frequently in the context of density estimation. Many of the best probabilistic models represent probability distributions only implicitly. Computing the actual probability value assigned to a specific point in space in many such models is intractable. In these cases, one must design an alternative criterion that still corresponds to the design objectives, or design a good approximation to the desired criterion.

It is this part that interests me:

Many of the best probabilistic models represent probability distributions only implicitly.

I don't have the experience to understand what this means (what does it mean to represent distributions "implicitly"?). I would greatly appreciate it if people would please take the time to elaborate upon this.