Is there any need to use a non-linear activation function (ReLU, LeakyReLU, Sigmoid, etc.) if the result of the convolution layer is passed through the sliding window max function, like max-pooling, which is non-linear itself? What about the average pooling?

1 Answers

Let's first recapitulate why the function that calculates the maximum between two or more numbers, $z=\operatorname{max}(x_1, x_2)$, is not a linear function.

A linear function is defined as $y=f(x) = ax + b$, so $y$ linearly increases with $x$. Visually, $f$ corresponds to a straight line (or hyperplane, in the case of 2 or more input variables).

If $z$ does not correspond to such a straight line (or hyperplane), then it cannot be a linear function (by definition).

Let $x_1 = 1$ and let $x_2 \in [0, 2]$. Then $z=\operatorname{max}(x_1, x_2) = x_1$ for all $x_2 \in [0, 1]$. In other words, for the sub-range $x_2 \in [0, 1]$, the maximum between $x_1$ and $x_2$ is a constant function (a horizontal line at $x_1=1$). However, for the sub-range $x_2 \in [1, 2]$, $z$ correspond to $x_2$, that is, $z$ linearly increases with $x_2$. Given that max is not a linear function in a special case, it can't also be a linear function in general.

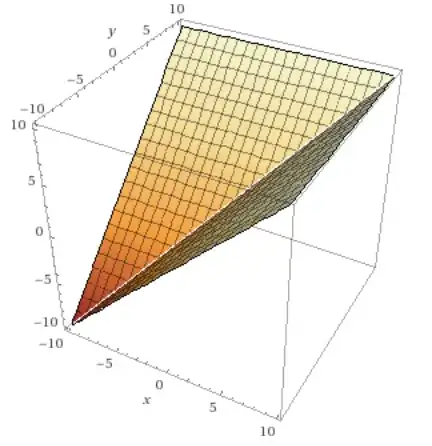

Here's a plot (computed with Wolfram Alpha) of the maximum between two numbers (so it is clearly a function of two variables, hence the plot is 3D).

Note that, in this plot, both variables, $x$ and $y$, can linearly increase, as opposed to having one of the variables fixed (which I used only to give you a simple and hopefully intuitive example that the maximum is not a linear function).

In the case of convolution networks, although max-pooling is a non-linear operation, it is primarily used to reduce the dimensionality of the input, so that to reduce overfitting and computation. In any case, max-pooling doesn't non-linearly transform the input element-wise.

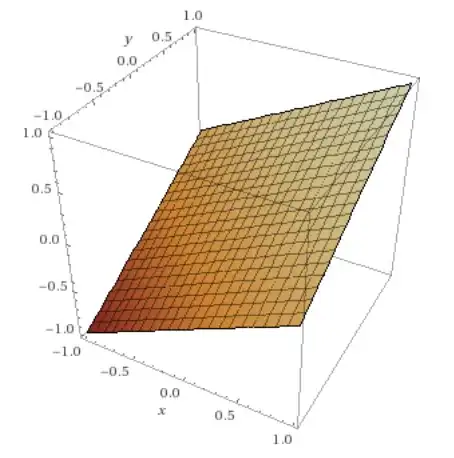

The average function is a linear function because it linearly increases with the inputs. Here's a plot of the average between two numbers, which is clearly a hyperplane.

In the case of convolution networks, the average pooling is also used to reduce the dimensionality.

To answer your question more directly, the non-linearity is usually applied element-wise, but neither max-pooling nor average pooling can do that (even if you downsample with a $1 \times 1$ window, i.e. you do not downsample at all).

Nevertheless, you don't necessarily need a non-linear activation function after the convolution operation (if you use max-pooling), but the performance will be worse than if you use a non-linear activation, as reported in the paper Systematic evaluation of CNN advances on the ImageNet (figure 2).

- 42,615

- 12

- 119

- 217