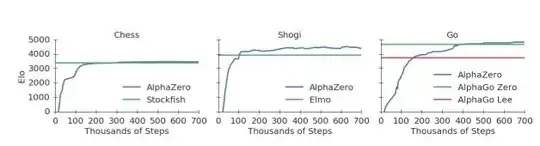

When training a neural network, you often see a curve showing how the network's performance improves over the course of training. It usually rises very quickly at first, then flattens out until it is almost horizontal.

Is there a mathematical formula that matches these curves?

Some functions with a similar shape (for $x \ge 0$) are:

$$y=1-e^{-x}$$

$$y=\frac{x}{1+x}$$

$$y=\tanh(x)$$

$$y=1+x-\sqrt{1+x^2}$$
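To compare these candidates side by side, here is a minimal Python sketch that tabulates all four at a few points. The names `CANDIDATES` and `tabulate` and the sample points are only illustrative choices, not taken from any library. All four functions pass through the origin with slope 1 and level off towards 1, but they approach that limit at different rates, which might help tell them apart when comparing against a real training curve.

```python
import math

# The four candidate curves from the question, evaluated for x >= 0.
# (Hypothetical names, chosen for this sketch only.)
CANDIDATES = {
    "1 - exp(-x)": lambda x: 1.0 - math.exp(-x),
    "x / (1 + x)": lambda x: x / (1.0 + x),
    "tanh(x)": math.tanh,
    "1 + x - sqrt(1 + x^2)": lambda x: 1.0 + x - math.sqrt(1.0 + x * x),
}


def tabulate(xs):
    """Return {name: [f(x) for x in xs]} for every candidate curve."""
    return {name: [f(x) for x in xs] for name, f in CANDIDATES.items()}


if __name__ == "__main__":
    xs = [0.0, 0.5, 1.0, 2.0, 5.0, 20.0]
    print(" " * 24 + "  ".join(f"x={x:<5g}" for x in xs))
    for name, ys in tabulate(xs).items():
        print(f"{name:>22}: " + "  ".join(f"{y:7.4f}" for y in ys))
```

Plotting the same values on top of a recorded training curve would be one way to judge by eye which formula, if any, comes close.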

Is there a theoretical reason for this shape?