In OpenAI Gym, "reward" is defined as:

> reward (float): amount of reward achieved by the previous action. The scale varies between environments, but the goal is always to increase your total reward.

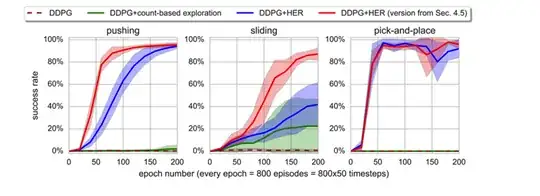

I am training Hindsight Experience Replay (HER) on the Fetch robotics environments, where rewards are sparse and binary, indicating whether or not the task has been completed. The original paper implementing HER uses success rate as the metric in its plots, like so:

On page 5 of the original paper, it is stated that the reward is binary and sparse.

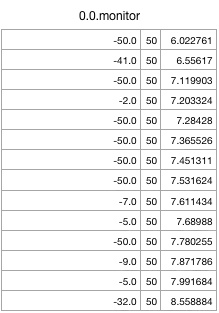

When I print the rewards obtained during a simulation of FetchReach-v1 trained with HER, I get the following values. The first column shows the reward and the second column shows the episode length.

As can be seen, I receive a reward at every time step. Sometimes I get a reward of $-1$ at every time step throughout the episode, for a total of $-50$. The maximum total reward I can achieve over an episode is $0$.
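
To make the numbers concrete, here is a minimal sketch of how I understand the per-step rewards to add up over a 50-step episode. It assumes a reward of $-1$ on every step where the goal is not reached and $0$ otherwise. The helper names `summarize_episode` and `success_rate` are my own, and treating an episode as successful when its final reward is $0$ is an assumption on my part, not something I have confirmed from the paper or from Gym.

```python
from typing import List, Tuple


def summarize_episode(step_rewards: List[float]) -> Tuple[float, bool]:
    """Return (total reward, success flag) for one episode.

    Assumption: the reward is -1.0 on steps where the goal is not reached
    and 0.0 on steps where it is. The success criterion used here (final
    step reward equals 0.0) is hypothetical, chosen only for illustration.
    """
    episode_return = sum(step_rewards)
    success = len(step_rewards) > 0 and step_rewards[-1] == 0.0
    return episode_return, success


def success_rate(episodes: List[List[float]]) -> float:
    """Fraction of episodes flagged as successful by summarize_episode."""
    flags = [summarize_episode(rewards)[1] for rewards in episodes]
    return sum(flags) / len(flags)


# Three made-up 50-step episodes (not real simulation output).
never_reached = [-1.0] * 50                   # goal never reached
reached_after_10 = [-1.0] * 10 + [0.0] * 40   # goal reached at step 11
always_at_goal = [0.0] * 50                   # goal satisfied throughout

for name, rewards in [
    ("never_reached", never_reached),
    ("reached_after_10", reached_after_10),
    ("always_at_goal", always_at_goal),
]:
    total, ok = summarize_episode(rewards)
    print(f"{name}: total reward = {total}, success = {ok}")

print("success rate:", success_rate([never_reached, reached_after_10, always_at_goal]))
```

Under these assumptions the total reward ranges from $-50$ to $0$ and only counts the steps spent away from the goal, whereas the success rate is a per-episode yes/no averaged over episodes.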

Therefore, my question is: what is the reward obtained at each time step? What does it represent, and how is it different from the success rate?