I don't really understand what this equation is saying or what the purpose of the ELBO is. How does it help us find the true posterior distribution?

2 Answers

From this document, as you found here, $X$ is an observed variable and $Z$ is a hidden variable; $p(X)$ is the density function of $X$. The posterior distribution of the hidden variables can then be written as follows using the Bayes’ Theorem:

$$p(Z|X) = \frac{p(X|Z)p(Z)}{p(X)} = \frac{p(X|Z)p(Z)}{\int_Zp(X,Z)}$$

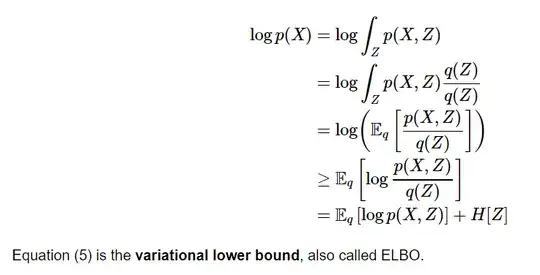

Now base on what you post, if we denote that $L= \mathbb{E}_q [\log p(X, Z)] + H[Z]$ ($q(Z)$ is a distribution we use to approximation the true posterior distribution $p(Z|X)$ in VB and $H[Z] = -\mathbb{E}_q [\log q(Z)]$), then it is obvious that $L$ is a lower bound of the log probability of the observations. As a result, if in some cases we want to maximize the marginal probability (the log probability of the observations), we can instead maximize its variational lower bound $L$. As a real example, you can follow the "Multiple Object Recognition with Visual Attention" example in the referenced document.

Moreover, the term $L$ will be presented in KL-divergence that will be used to measuring the similarity of two distributions. Be aware that there is progress on the bound in this paper (Fixing a Broken ELBO).

- 1,866

- 12

- 19

The use of KL provides a more intuitive way of what the ELBO is attempting to maximize.

Basically, we want to find a posterior approximation such that $p(z\mid x) \approx q(z)\in\mathcal{Q}$

$$KL(q(z)\parallel p(z\mid x)) \rightarrow \min_{q(z)\in\mathcal{Q}}$$

As a result of this, while finding this optimal posterior approximation, we maximize the probability of all the observed data $x$. Note that the evidence is usually intractable. Thus, can express the $KL$ as follows:

\begin{align*} \log p(x) &= \int q(z) \log p(x)dz \\ &= \int q(z) \log\frac{p(x,\theta)}{p(\theta\mid x)}dz \\ &= \int q(z) \log\frac{p(x,z)q(z)}{p(\theta\mid x)q(z)}dz\\ &= \int q(z) \log\frac{p(x,z)}{q(z)}dz + \int q(z) \log\frac{q(z)}{p(z\mid x)}dz \\ &= \mathcal{L}(q(z)) + KL(q(z)\parallel p(z\mid x)) \end{align*}

In this case, KL just gives us the difference between $q$ and $p$. We want to make this difference close to zero meaning that $q=p$. So, minimizing the KL is the same as maximizing the ELBO, and as a result, we obtain the lower bound in your expression. If you expand your bound, you can find a nice interpretion:

$$ \begin{align*} \mathcal{L}(q(z)) &= \int q(z) \log\frac{p(x,z)}{q(z)}dz \\ &= \mathbb{E}_{q(z)} \log p(x\mid z) - KL(q(z)\parallel p(z)) \end{align*} $$

When we optimize this expression, we want to find a $q$ that fits our data properly and also is really close to true posterior. Thus, $\mathbb{E}_{q(z)} \log p(x\mid z)$ act as a data term and $KL(q(z)\parallel p(z)) $ as a regularizer.

- 21

- 2