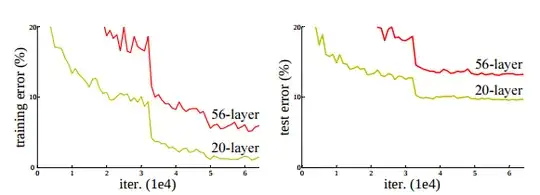

My understanding of the vanishing gradient problem in deep networks is that as backpropagation proceeds backward through the layers, the gradients become small, and thus training progresses more slowly. I'm having a hard time reconciling this with images such as the one above, where the loss for a deeper network is higher than for a shallower one. Shouldn't the deeper network just take longer to complete each iteration, but still reach the same or even higher accuracy?

2 Answers

In theory, deeper architectures can encode more information than shallower ones because they apply more transformations to the input, which can produce better results at the output. Training is slower because backpropagation is expensive: as you increase the depth, you increase the number of parameters, and therefore the number of gradients that must be computed.
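
To make the cost argument concrete, here is a minimal sketch that counts the trainable parameters of a plain fully connected network as hidden layers are added. The function name `mlp_param_count` and the layer widths (784 inputs, 256 hidden units, 10 outputs) are hypothetical choices for illustration only.

```python
def mlp_param_count(input_dim: int, hidden_dim: int, depth: int, output_dim: int) -> int:
    """Weights plus biases of a fully connected net with `depth` equal-width hidden layers."""
    dims = [input_dim] + [hidden_dim] * depth + [output_dim]
    # Each layer mapping d_in -> d_out has d_in * d_out weights and d_out biases.
    return sum(d_in * d_out + d_out for d_in, d_out in zip(dims[:-1], dims[1:]))


# Hypothetical sizes: 784 inputs, 256 hidden units per layer, 10 outputs.
for depth in (2, 8, 32):
    print(f"{depth:>2} hidden layers -> {mlp_param_count(784, 256, depth, 10):,} parameters")
```

With these sizes the count goes from 269,322 (2 hidden layers) to 664,074 (8) to 2,243,082 (32); each extra hidden layer adds 65,792 parameters, and every one of them needs a gradient on each backward pass.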

Another issue to take into account is the effect of the activation function. Saturating functions like the sigmoid and hyperbolic tangent have very small gradients at their tails, and other activation functions are simply flat in places; e.g. ReLU is flat for negative inputs. In either case there is little or no error to propagate, because the gradient is either very small (as in saturating functions) or zero. Batch Norm helps greatly here because it rescales values into ranges where the gradients aren't close to zero.
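
A minimal sketch of both points, in plain Python: it compares the local derivatives of sigmoid, tanh and ReLU at the center and at the tails, then shows what happens to the average sigmoid derivative when a batch of saturated pre-activations is re-centered. The `standardize` helper is a simplified, hypothetical stand-in for Batch Norm: it uses only the current batch's mean and variance and omits the learned scale and shift.

```python
import math


def sigmoid_grad(z: float) -> float:
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)  # at most 0.25, reached at z == 0


def tanh_grad(z: float) -> float:
    return 1.0 - math.tanh(z) ** 2  # at most 1.0, reached at z == 0


def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0  # exactly zero for negative inputs


def standardize(batch: list[float], eps: float = 1e-5) -> list[float]:
    """Batch-norm-style normalization WITHOUT the learned scale/shift (simplification)."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [(x - mean) / math.sqrt(var + eps) for x in batch]


def mean_sigmoid_grad(batch: list[float]) -> float:
    return sum(sigmoid_grad(z) for z in batch) / len(batch)


# Local derivatives in the middle vs. at the tails of each activation.
for z in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"z={z:+6.1f}  sigmoid'={sigmoid_grad(z):.1e}  tanh'={tanh_grad(z):.1e}  relu'={relu_grad(z):.0f}")

# Hypothetical pre-activations sitting deep in sigmoid's flat right tail.
batch = [8.0, 9.0, 10.0, 11.0, 12.0]
print("mean sigmoid' before normalization:", mean_sigmoid_grad(batch))
print("mean sigmoid' after normalization: ", mean_sigmoid_grad(standardize(batch)))
```

Before normalization the average derivative is on the order of 1e-4; after re-centering it is around 0.2. Real Batch Norm also learns a per-feature scale and shift and uses running statistics at inference time, so this only illustrates why moving values away from the flat tails helps.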

---

Those graphs do not disprove your 'vanishing gradient' theory. The deeper network may eventually do better than the shallower one, but it might take much longer to do it.

Incidentally, the ReLU activation function was designed to mitigate the vanishing gradient problem.
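
A minimal sketch of why ReLU helps, under a deliberately simplified assumption: a chain of scalar units in which every layer has the same weight and the same pre-activation `z`. By the chain rule, the gradient reaching the first layer is then the product of the per-layer factors `weight * f'(z)`. Even in sigmoid's best case (`z = 0`, derivative 0.25) that product is 0.25 to the power of the depth, whereas ReLU's derivative is exactly 1 for positive inputs. The helper `chain_gradient` is hypothetical, written only for this illustration.

```python
import math


def sigmoid_grad(z: float) -> float:
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)  # peaks at 0.25 when z == 0


def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0


def chain_gradient(local_grad, depth: int, z: float, weight: float = 1.0) -> float:
    """Gradient reaching the first unit of a scalar chain of `depth` identical layers.

    Simplifying assumption: every layer has the same weight and the same
    pre-activation z, so the chain rule reduces to (weight * f'(z)) ** depth.
    """
    grad = 1.0
    for _ in range(depth):
        grad *= weight * local_grad(z)
    return grad


for depth in (1, 5, 10, 20):
    print(depth, chain_gradient(sigmoid_grad, depth, z=0.0), chain_gradient(relu_grad, depth, z=0.5))
```

ReLU only removes the activation's own contribution to the shrinkage: small weights still shrink the product, and units with negative inputs pass back no gradient at all (the `z=-0.5` case gives exactly 0).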
