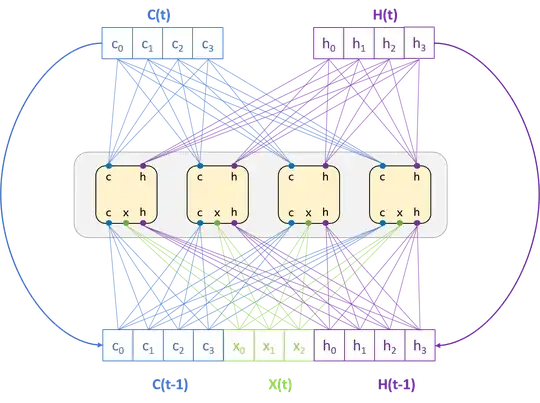

I was following some examples to get familiar with TensorFlow's LSTM API, and noticed that every LSTM cell constructor requires only the `num_units` parameter, which denotes the number of hidden units in a cell.

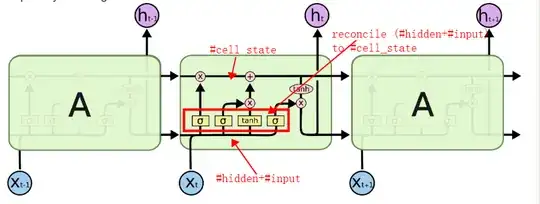

According to what I have learned from colah's well-known blog post, the cell state is separate from the hidden state, so (I think) the two could have different dimensions. In that case we should pass at least two parameters: one for the hidden size and one for the cell-state size.

So this confuses me a lot when I try to figure out what TensorFlow's cells do. Under the hood, are they implemented like this just for the sake of convenience, or did I misunderstand something in the blog post?
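
To make the question concrete, here is a minimal sketch of one LSTM step as I read the equations in the blog, written in plain Python. This is not TensorFlow's implementation; `lstm_step`, `init_params`, and the parameter names (`Wf`, `Wi`, `Wc`, `Wo`, and so on) are hypothetical names made up for illustration. In this formulation a single `num_units` sizes every gate, and the last line multiplies the output gate elementwise with `tanh(c)`, so `h` and `c` come out with the same length.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, v, b):
    # Computes W @ v + b, where W is a list of rows.
    return [sum(w * x for w, x in zip(row, v)) + b_k for row, b_k in zip(W, b)]


def init_params(num_units, input_size, seed=0):
    # One weight matrix and one bias per gate; every gate has num_units rows.
    rng = random.Random(seed)
    params = {}
    for gate in "fico":
        params["W" + gate] = [
            [rng.uniform(-0.1, 0.1) for _ in range(num_units + input_size)]
            for _ in range(num_units)
        ]
        params["b" + gate] = [0.0] * num_units
    return params


def lstm_step(x, h_prev, c_prev, params):
    z = h_prev + x  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(params["Wf"], z, params["bf"])]    # forget gate
    i = [sigmoid(a) for a in affine(params["Wi"], z, params["bi"])]    # input gate
    g = [math.tanh(a) for a in affine(params["Wc"], z, params["bc"])]  # candidate values
    o = [sigmoid(a) for a in affine(params["Wo"], z, params["bo"])]    # output gate
    # New cell state: elementwise f * c_prev + i * g
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # New hidden state: elementwise o * tanh(c)
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c


num_units, input_size = 4, 3
params = init_params(num_units, input_size)
h, c = lstm_step([1.0, 0.5, -0.5], [0.0] * num_units, [0.0] * num_units, params)
print(len(h), len(c))  # 4 4
```

If this reading is right, I don't see where a second size parameter for the cell state would enter, which is why I suspect I'm missing something.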