Max-pooling pools information together. Imagine you have two convolutional layers, $F_1$ and $F_2$, each with a 3x3 kernel, a stride of $1$, and no padding. Also imagine your input $I$ has shape $(w,h)$, and call $M$ a max-pooling layer of size $(2,2)$ (with the usual stride of $2$, which the shapes below assume).

Note: I'm ignoring channels because they aren't needed for this argument, and it extends to any number of them.

Now you have two cases:

- $O_1 = F_2 \circ F_1 \circ I$

- $O_2 = F_2 \circ M \circ F_1 \circ I$

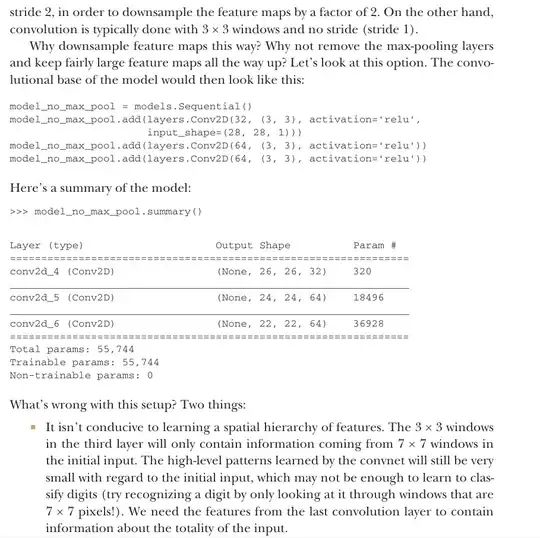

In these cases, $shape(O_1)=(w-4, h-4)$ and $shape(O_2)=\left(\frac{w-2}{2}-2, \frac{h-2}{2}-2 \right)$. If we plug in dummy values, like $w,h = 64,64$, the shapes become $(60,60)$ and $(29,29)$ respectively. As you can tell, these are very different!
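
The shape arithmetic above can be checked with a few lines of Python. This is only a sketch under the same assumptions as the text (no padding, convolution stride 1, pooling stride equal to the pool size); the helper names `out_size`, `shape_o1` and `shape_o2` are made up for illustration and are not from any library.

```python
def out_size(size, kernel, stride=1):
    """Output length along one axis for an unpadded convolution or pooling."""
    return (size - kernel) // stride + 1


def shape_o1(w, h):
    """Shape of O_1 = F_2 . F_1 . I (two 3x3 convolutions, stride 1)."""
    after_f1 = (out_size(w, 3), out_size(h, 3))
    return (out_size(after_f1[0], 3), out_size(after_f1[1], 3))


def shape_o2(w, h):
    """Shape of O_2 = F_2 . M . F_1 . I (3x3 conv, 2x2 max-pool, 3x3 conv)."""
    after_f1 = (out_size(w, 3), out_size(h, 3))
    # Assumption: the pooling stride equals the pool size (2).
    after_m = (out_size(after_f1[0], 2, stride=2), out_size(after_f1[1], 2, stride=2))
    return (out_size(after_m[0], 3), out_size(after_m[1], 3))


print(shape_o1(64, 64))  # (60, 60)
print(shape_o2(64, 64))  # (29, 29)
```

Note that the integer division floors the result, so for inputs where $w-2$ is odd the pooled size is rounded down rather than being exactly $\frac{w-2}{2}$.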

Now, there is more of a difference than just the size of the outputs: with pooling, each neuron holds information pooled from a larger part of the input. Let's work it out:

Each output neuron of $F_1 \circ I$ has information from a $(3,3)$ receptive field.

Each output neuron of $F_2 \circ F_1 \circ I$ then has information from a $(3,3)$ receptive field of $F_1 \circ I$, which, if we eliminate reused nodes, is a $(5,5)$ receptive field from the initial $I$.

Each output neuron of $M \circ F_1 \circ I$ has information from a $(2,2)$ receptive field of $F_1 \circ I$, which, if we eliminate reused nodes, is a $(4,4)$ receptive field from the initial $I$.

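Each output neuron of $F_2 \circ M \circ F_1 \circ I$ then has information from a $(3,3)$ receptive field of $M \circ F_1 \circ I$. Because neighbouring pooled neurons sit 2 apart in $F_1 \circ I$, those nine neurons cover a $(6,6)$ patch of $F_1 \circ I$, which, if we eliminate reused nodes, is an $(8,8)$ receptive field from the initial $I$.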
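
The receptive-field sizes above can be reproduced with the standard recurrence: each layer widens the field by (kernel - 1) times the product of the strides of all earlier layers. Below is a minimal sketch; the function name `receptive_field` and the `(kernel, stride)` layer encoding are illustrative choices, and the pooling stride of 2 is an assumption.

```python
def receptive_field(layers):
    """Side length of the input patch seen by one output neuron.

    `layers` is a list of (kernel, stride) pairs, ordered from input to output.
    """
    rf = 1    # a single input pixel sees itself
    jump = 1  # distance in input pixels between neighbouring neurons of the current layer
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


conv = (3, 1)  # 3x3 convolution, stride 1
pool = (2, 2)  # 2x2 max-pool, assumed stride 2

print(receptive_field([conv]))              # F_1: 3
print(receptive_field([conv, conv]))        # F_2 . F_1: 5
print(receptive_field([conv, pool]))        # M . F_1: 4
print(receptive_field([conv, pool, conv]))  # F_2 . M . F_1: 8
```

The pooling layer itself adds only one pixel to the field (3 to 4), but it doubles `jump`, so the convolution that follows widens the field by 4 instead of 2.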

So, to sum up: max-pooling heavily reduces the feature space by throwing out a lot of nodes whose features aren't as indicative (which makes training models more tractable), and it also extends the receptive field with no additional parameters.