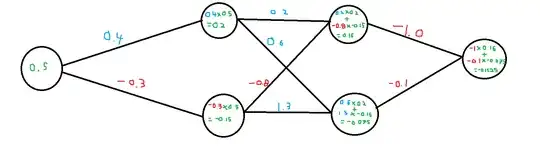

Simon Krannig's answer provides the math notation behind exactly what is going on, but since you still seem a bit confused, I've made a visual representation of a neural network using only weights, with no bias and no activation function. See below:

So I'm fairly sure it is as you suspected: at each neuron, you take each input from the previous layer, multiply it by the weight on the connection from that input to the neuron, and sum the results. Each input has its own unique weight for every one of its outgoing connections.

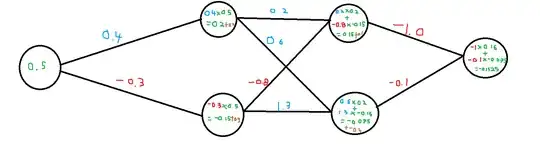
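As a minimal sketch in plain Python (no libraries), the per-neuron rule looks like this. The function names `weighted_sum` and `layer` are illustrative and not from any library. The first example reuses the layer-2 values 0.2 and -0.15 and the weights 0.6 and 1.3 from the layer-3 sigmoid expression in this answer; the weights in the second example are invented purely for illustration.

```python
def weighted_sum(inputs, weights):
    """Sum of each input times the weight on its connection to this neuron."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per incoming connection")
    return sum(x * w for x, w in zip(inputs, weights))


def layer(inputs, weight_rows):
    """Outputs of a whole layer with no bias and no activation.

    weight_rows[j][i] is the weight from input i to neuron j, so every input
    has its own separate weight for each neuron it connects to.
    """
    return [weighted_sum(inputs, row) for row in weight_rows]


# Node 2 of layer 3: 0.2*0.6 + (-0.15)*1.3
print(round(weighted_sum([0.2, -0.15], [0.6, 1.3]), 6))  # -0.075

# Hypothetical 2-input, 2-neuron layer (weights made up for illustration)
print(layer([1.0, 2.0], [[0.1, 0.2], [0.3, -0.4]]))
```

Storing one row of weights per receiving neuron is just one convenient layout; it makes "one weight per connection" explicit.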

With a bias, you would do exactly the same math as shown in the image above, but once you have the weighted sum for a node (0.2, -0.15, 0.16 and -0.075 in the drawing; the output layer doesn't have a bias), you add that node's bias to it. See below for an example including a bias:

NOTE: I did not update the outputs at each layer to include the bias because I can't be bothered redrawing this in Paint. Just know that the final values for the nodes with the brown bias have not been carried over to the next layer.
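Here is a minimal sketch of adding the bias, again in plain Python. The function name `pre_activation` is my own, and the biases 0.2 and -0.4 are read off the sigmoid expressions in this answer. The `bias=0.0` default is an assumption standing in for nodes like the output layer's, which have no bias.

```python
def pre_activation(inputs, weights, bias=0.0):
    """Weighted sum of the inputs, with the neuron's bias added once at the end."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Node 1 of layer 2: (0.4*0.5) + 0.2
print(round(pre_activation([0.4], [0.5], 0.2), 6))  # 0.4

# Node 2 of layer 3: (0.6*0.2) + (1.3*-0.15) - 0.4
print(round(pre_activation([0.2, -0.15], [0.6, 1.3], -0.4), 6))  # -0.475
```

The bias is added once per neuron, not once per connection, which is why it sits outside the sum.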

Then, if you were to include an activation function, you would finally pass that value through it. So, including the biases, node 1 of layer 2 would be (let's pretend your activation function is a sigmoid):

`sigmoid((0.4*0.5)+0.2)`

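and for node 2 of layer 3 (this reuses the drawing's layer-2 values 0.2 and -0.15, which, as noted above, don't include the bias; in a complete forward pass you would feed in layer 2's biased, activated outputs instead):

`sigmoid(((0.6*0.2)+(1.3*-0.15))-0.4)`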
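To make the two sigmoid expressions concrete, here is a minimal Python sketch that evaluates them with `math.exp`. The names `sigmoid` and `neuron` are illustrative choices, not from any library. The numbers are exactly those in the expressions, so the layer-3 line uses the same shortcut of taking layer 2's values straight from the drawing.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: maps any real number into the range (0, 1).

    Note: math.exp(-z) raises OverflowError for very negative z (around -710),
    which is fine for small hand-worked values like these.
    """
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias=0.0):
    """Weighted sum plus bias, passed through the sigmoid activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# sigmoid((0.4*0.5)+0.2): node 1 of layer 2
print(neuron([0.4], [0.5], 0.2))

# sigmoid(((0.6*0.2)+(1.3*-0.15))-0.4): node 2 of layer 3
print(neuron([0.2, -0.15], [0.6, 1.3], -0.4))
```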

That is how you would do a forward pass of a simple neural network.
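Putting the pieces together, here is a minimal sketch of a full forward pass in plain Python. Everything in it is an assumption for illustration: the helper names `dense` and `forward`, the 2-2-1 network shape, and all weights and biases are invented and are not the values in the drawing. Unlike the worked example, each layer here receives the previous layer's biased, activated outputs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    return z


def dense(inputs, weight_rows, biases, activation):
    """One layer: neuron j outputs activation(sum_i inputs[i] * weight_rows[j][i] + biases[j])."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, biases)
    ]


def forward(inputs, layers):
    """Feed the inputs through each layer in turn; each layer's outputs become the next layer's inputs."""
    values = inputs
    for weight_rows, biases, activation in layers:
        values = dense(values, weight_rows, biases, activation)
    return values


# Hypothetical 2-2-1 network (all numbers made up for illustration)
layers = [
    ([[0.5, -0.3], [0.8, 0.1]], [0.2, -0.4], sigmoid),  # hidden layer with biases and sigmoid
    ([[1.2, -0.7]], [0.0], identity),                   # output layer: no bias, no activation
]
print(forward([0.4, 0.9], layers))
```

The explicit loops mirror the diagram one multiply-and-add at a time; for larger networks the same computation is usually written as a matrix multiplication (for example with NumPy's `@` operator).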