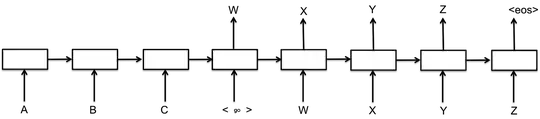

A seq2seq decoder models the joint distribution of a char/word sequence by factorizing it into time-forward (left-to-right) conditionals:

\begin{align*}
p(w_1, w_2, \dots, w_n) &= p(w_1) \, p(w_2 \mid w_1) \cdots p(w_n \mid w_1, \dots, w_{n-1}) \\
&= p(w_1) \prod_{i=2}^{n} p(w_i \mid w_{<i})
\end{align*}

You can sample from this joint distribution by sampling each conditional in ascending order (ancestral sampling), and that's exactly what the decoder is trying to imitate. You want the second output to depend on the sampled first output, not on the first output's distribution.
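To make the sampling order concrete, here is a minimal sketch in Python. The vocabulary and `next_token_probs` are hypothetical stand-ins for a trained decoder step, and the probabilities are made up for illustration. The point is only that each step conditions on the tokens actually sampled so far.

```python
import random

# Toy vocabulary (an assumption for illustration, not from any real model).
VOCAB = ["a", "b", "<eos>"]


def next_token_probs(prefix):
    """Hypothetical stand-in for one decoder step: returns p(w_i | w_<i).

    A real decoder would condition on the whole prefix; this toy version
    only looks at the last sampled token.
    """
    if not prefix:
        return [0.5, 0.5, 0.0]   # p(w_1)
    if prefix[-1] == "a":
        return [0.1, 0.6, 0.3]   # p(w_i | ..., "a")
    return [0.6, 0.1, 0.3]       # p(w_i | ..., "b")


def sample_sequence(max_len=10, seed=None):
    """Sample w_1, then w_2 given the sampled w_1, and so on."""
    rng = random.Random(seed)
    prefix = []
    for _ in range(max_len):
        probs = next_token_probs(prefix)
        # Draw a concrete token; the next step sees this sample,
        # not the distribution it was drawn from.
        token = rng.choices(VOCAB, weights=probs, k=1)[0]
        if token == "<eos>":
            break
        prefix.append(token)
    return prefix
```

Calling `sample_sequence(seed=0)` returns one sampled list of tokens; different seeds will generally give different sequences, because each draw changes what the later conditionals see.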

This is why the hidden state is NOT a good fit for modeling this setup: it is a latent representation of the distribution, not a sample from the distribution.

Note: In training, they use the ground truth as input by default, because it works under the assumption that the model should've predicted the correct word, and, if it didn't, the gradient of the word/char-level loss will reflect that. This is called teacher forcing, and it has a multitude of pitfalls.
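As a rough sketch of what teacher forcing looks like, assuming a toy decoder step (`next_token_probs` and its probability table are hypothetical, made up for illustration): the decoder input at each step is the previous ground-truth token, and the loss is the summed negative log-likelihood of the ground-truth tokens.

```python
import math

# Toy vocabulary (an assumption for illustration).
VOCAB = ["a", "b", "<eos>"]


def next_token_probs(prev_token):
    """Hypothetical toy decoder step conditioned only on the previous token."""
    table = {
        "<bos>": [0.5, 0.5, 0.0],
        "a": [0.1, 0.6, 0.3],
        "b": [0.6, 0.1, 0.3],
    }
    return table[prev_token]


def teacher_forced_nll(target):
    """Negative log-likelihood of `target` under teacher forcing.

    The input at step i is the ground-truth token from step i-1
    (shifted right, starting with <bos>), never the model's own sample.
    """
    inputs = ["<bos>"] + target[:-1]
    nll = 0.0
    for prev, gold in zip(inputs, target):
        p_gold = next_token_probs(prev)[VOCAB.index(gold)]
        nll -= math.log(p_gold)
    return nll
```

For example, `teacher_forced_nll(["a", "b", "<eos>"])` scores the target using the ground-truth prefix at every step, so a wrong prediction at one step only shows up in that step's loss term and does not change the inputs of later steps.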