According to Wikipedia's article on artificial general intelligence (AGI):

> Artificial general intelligence (AGI) is the intelligence of a (hypothetical) machine that could successfully perform any intellectual task that a human being can.

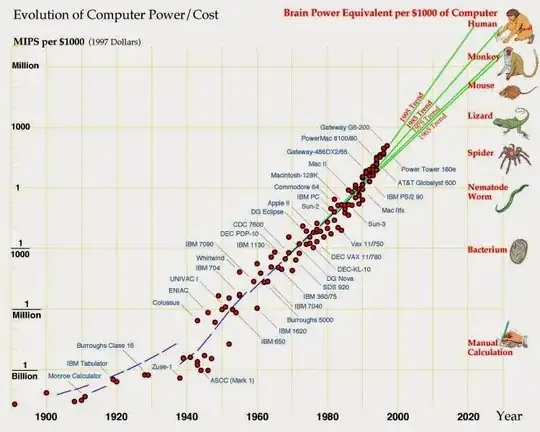

According to the image above (a screenshot of a figure from *When will computer hardware match the human brain?* (1998) by Hans Moravec, which Kurzweil also uses in his book *The Singularity Is Near: When Humans Transcend Biology*), today's artificial intelligence is roughly on par with that of lizards.

Let's assume that within 10 to 20 years we humans succeed in creating an AGI, that is, an AI with human-level intelligence and emotions.

At that point, could we destroy an AGI without its consent? Would this be considered murder?