I am running a basic DQN (Deep Q-Network) on the Pong environment. It is not a CNN, just a 3-layer fully connected neural net with ReLU activations.

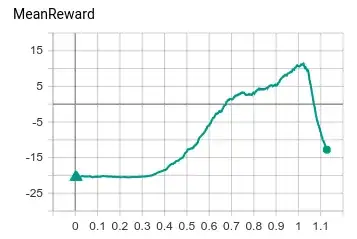

It seems to work for the most part, but at some point, my model suffers from catastrophic performance loss:

What is the actual cause of this?

What are the common ways to avoid this? Clipping the gradients? What else?
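To be concrete about what I mean by clipping the gradients, here is a minimal sketch in plain Python of clipping by global L2 norm. The function name `clip_by_global_norm` and the threshold `max_norm` are hypothetical and are not taken from my training code; in practice a framework utility (for example `torch.nn.utils.clip_grad_norm_` in PyTorch) would do the same job.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so that their combined L2 norm is at most max_norm.

    grads is a list of per-layer gradient lists (hypothetical layout).
    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    # Global norm: square root of the sum of squares over every layer.
    total_norm = math.sqrt(sum(g * g for layer in grads for g in layer))

    # Already within the limit (or all zeros): return an unchanged copy.
    if total_norm <= max_norm or total_norm == 0.0:
        return [list(layer) for layer in grads], total_norm

    # Otherwise shrink every gradient by the same factor, which keeps
    # the update direction and only limits its size.
    scale = max_norm / total_norm
    return [[g * scale for g in layer] for layer in grads], total_norm


# Example: two "layers" whose combined gradient norm is 5.0.
clipped, norm_before = clip_by_global_norm([[3.0], [4.0]], max_norm=1.0)
```

The idea would be to call this on the gradients after the backward pass and before the optimizer step, so that a single bad batch cannot produce an arbitrarily large update.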

(Reloading from a previous successful checkpoint feels more like a hack than a proper solution to this issue.)