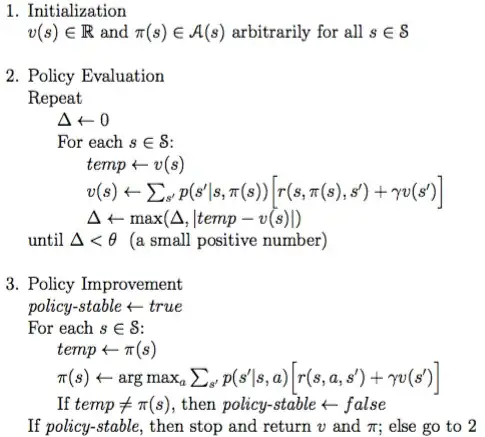

Consider the grid world problem in RL. Formally, a policy in RL is defined as $\pi(a|s)$. If we solve grid world by policy iteration, we use the pseudocode shown above.

My question is related to the policy improvement step. Specifically, I am trying to understand the following update rule.

$$\pi(s) \leftarrow \arg\max_a \sum_{s'} p(s'|s,a)\,[r(s,a,s') + \gamma v(s')]$$
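
To make the rule concrete, here is a minimal sketch of how I read it for a tabular problem. The two-state, two-action MDP and the names `P`, `R`, `q_value`, and `improve_policy` are made up for illustration and are not taken from the pseudocode; `P[s][a][s2]` stands for $p(s'|s,a)$ and `R[s][a][s2]` for $r(s,a,s')$.

```python
def q_value(P, R, v, gamma, s, a):
    """Sum over s' of p(s'|s,a) * [r(s,a,s') + gamma * v(s')]."""
    return sum(P[s][a][s2] * (R[s][a][s2] + gamma * v[s2]) for s2 in range(len(v)))


def improve_policy(P, R, v, gamma):
    """Return one greedy action index per state (the arg max over actions)."""
    n_actions = len(P[0])
    return [
        max(range(n_actions), key=lambda a: q_value(P, R, v, gamma, s, a))
        for s in range(len(v))
    ]


# Hypothetical toy MDP: action 0 always leads to state 0, action 1 to state 1.
P = [
    [[1.0, 0.0], [0.0, 1.0]],  # transitions from state 0
    [[1.0, 0.0], [0.0, 1.0]],  # transitions from state 1
]
# Reward 1 for moving 0 -> 1, reward 2 for staying in 1, otherwise 0.
R = [
    [[0.0, 0.0], [0.0, 1.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
v = [0.0, 0.0]  # current value estimate
gamma = 0.9

print(improve_policy(P, R, v, gamma))  # [1, 1]
```

The result is a single action per state, which is what the left-hand side $\pi(s)$ of the update suggests to me.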

I can see two interpretations of this update rule:

1. In this step, we check which action (say, going right in a particular state) has the highest reward, and assign that action a probability of 1 and all other actions a probability of 0. Thus, in the policy evaluation (PE) step, we always go right in that state for all iterations, even if it is no longer the most rewarding action after some iterations.

2. We keep the policy improvement step in mind and, while doing the PE step, update $v(s)$ based on the action giving the highest reward. For example, if going right gives the highest reward at the first iteration ($k=0$), we update based on that; if going left gives the highest reward at $k=1$, we update based on that instead. Thus the action changes from iteration to iteration depending on the maximum reward.
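
To spell out the difference, here is a rough sketch of the evaluation loop under each interpretation. Everything in it is an assumption for illustration: the function names, the fixed number of sweeps in place of a convergence test, and the toy MDP are mine, not part of the pseudocode.

```python
def q_value(P, R, v, gamma, s, a):
    """Sum over s' of p(s'|s,a) * [r(s,a,s') + gamma * v(s')]."""
    return sum(P[s][a][s2] * (R[s][a][s2] + gamma * v[s2]) for s2 in range(len(v)))


def evaluate_fixed_policy(P, R, policy, gamma, sweeps):
    """Interpretation 1: the action in each state stays frozen during evaluation."""
    v = [0.0] * len(P)
    for _ in range(sweeps):
        # Every sweep backs up the same action policy[s] for state s.
        v = [q_value(P, R, v, gamma, s, policy[s]) for s in range(len(P))]
    return v


def evaluate_with_max(P, R, gamma, sweeps):
    """Interpretation 2: every sweep re-picks the best action in each state."""
    v = [0.0] * len(P)
    n_actions = len(P[0])
    for _ in range(sweeps):
        # The backed-up action can differ from one sweep to the next.
        v = [
            max(q_value(P, R, v, gamma, s, a) for a in range(n_actions))
            for s in range(len(P))
        ]
    return v


# Hypothetical toy MDP: action 0 always leads to state 0, action 1 to state 1.
P = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
# Reward 1 for moving 0 -> 1, reward 2 for staying in 1, otherwise 0.
R = [
    [[0.0, 0.0], [0.0, 1.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]

print(evaluate_fixed_policy(P, R, [0, 0], 0.9, 5))
print(evaluate_with_max(P, R, 0.9, 5))
```

In the first function the policy is an input that never changes inside the loop; in the second there is no policy argument at all, only a max over actions in every sweep.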

To me, the second interpretation looks very similar to value iteration. So, which one is the correct interpretation of policy iteration?