Where does this type of data arise?

The term, in the context of learning on non-Euclidean data, was probably first coined by Prof. Bronstein here. More recently, Prof. Bronstein and other leading authors in the field published a book on geometric deep learning, which in essence presents a unified mathematical framework for symmetry-based learning and illustrates how concepts from classical ML/DL extend to higher-dimensional geometric domains (chapter 4).

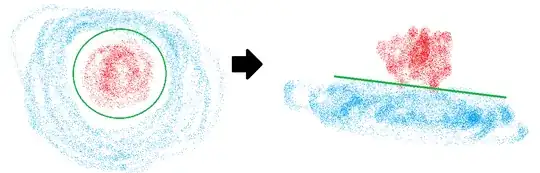

Apparently, graphs and manifolds are non-Euclidean data. Why exactly is that the case?

According to the unified mathematical framework presented in Prof. Bronstein's book, the blueprint of geometric DL consists of an underlying domain $\Omega$, signals $\mathcal{X}(\Omega)$ on that domain, and functions $\mathcal{F}(\mathcal{X}(\Omega))$ of those signals.

The domain is the geometric/algebraic structure behind any instance of that data type (basically, it shows how the constituent features are arranged). Associated with this domain is a symmetry group (all the transformations under which the domain is invariant).

The signal is the observable state/value/quantity/feature at each point of the domain.

The function(s) are the blocks/layers that we want to build as functions of the signals on the domain. Here is where we can inject inductive biases.

Translations form one of the symmetry groups of the image domain (among others). Convolutions, as functions of a signal on the domain $\mathbb{R}^2$, are translation equivariant: if you move an object in an image, applying the same kernel to both images yields the same features, only at shifted locations. This is the "inductive bias" (geometric prior): the function is aware of the domain, knows its properties, and uses them.
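To make the equivariance claim concrete, here is a minimal sketch in plain Python. It assumes a small discrete grid with wrap-around (periodic) boundaries instead of the continuous plane, and the helper names `shift2d` and `correlate2d`, the toy image and the kernel are all made up for illustration. It checks that "translate, then filter" gives the same result as "filter, then translate".

```python
def shift2d(image, dy, dx):
    """Cyclically translate a 2D grid by (dy, dx)."""
    h, w = len(image), len(image[0])
    return [[image[(y - dy) % h][(x - dx) % w] for x in range(w)]
            for y in range(h)]


def correlate2d(image, kernel):
    """Slide the kernel over the image, wrapping around at the borders."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(kernel[i][j] * image[(y + i) % h][(x + j) % w]
                for i in range(kh) for j in range(kw))
            for x in range(w)
        ]
        for y in range(h)
    ]


# A 5x5 image containing a single small "object" (a 2x2 block of ones).
image = [[0] * 5 for _ in range(5)]
image[1][1] = image[1][2] = image[2][1] = image[2][2] = 1

# Hypothetical kernel that responds to vertical edges.
kernel = [[1, -1], [1, -1]]

shift_then_filter = correlate2d(shift2d(image, 2, 1), kernel)
filter_then_shift = shift2d(correlate2d(image, kernel), 2, 1)

# Equivariance: both orders of operations give the same feature map.
print(shift_then_filter == filter_then_shift)
```

The periodic boundary is a simplifying choice: with zero padding the equality only holds away from the image borders.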

The underlying domain of an image is $\mathbb{R}^2$ (the position of the pixels in the (x, y) plane). In this context, one can say that the signal of an image is simply the colour/intensity of the pixels. As pointed out in this answer, this domain admits the Euclidean distance between two points in the plane, $d(x, y) = \sqrt{\sum_{i=0}^{1} (x_i - y_i)^2}$.

In broad terms, non-Euclidean data is data whose underlying domain is not equipped with the Euclidean distance as a metric between its points. To visualize the Euclidean case, think of $\mathbb{Z}^2$ instead of $\mathbb{R}^2$: it can be seen as a "grid" of integer-valued points in which neighbouring points are separated by a distance of 1. You can easily "visualize" the distance between the points of the domain.

Now let's move to graphs. What is the underlying domain of a graph? It's a set of nodes $\mathcal{V}$ and a set of edges $\mathcal{E}$. There is no such thing as distance in the Euclidean sense between the nodes of a graph. If you ask "What is the distance between nodes A and B?", the answer is probably a function of the connectivity of the graph (which is part of the domain), and it is not the same for arbitrary pairs of nodes in the graph.
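The contrast can be sketched in a few lines of Python. On a grid, distance follows from the coordinates alone; on a graph, one common choice (an assumption here, not the only possible notion of distance) is the number of edges on a shortest path, which depends entirely on connectivity. The toy graph and the function names are hypothetical.

```python
import math
from collections import deque


def euclidean(p, q):
    """Euclidean distance between two points given by their coordinates."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def hop_distance(adjacency, source, target):
    """Number of edges on a shortest path (BFS), or None if unreachable."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return dist[node]
        for neighbour in adjacency[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return None


# Grid domain: the coordinates alone determine the distance.
grid_distance = euclidean((0, 0), (3, 4))

# Graph domain: a hypothetical path A - B - C - D plus an isolated node E.
graph = {"A": ["B"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C"], "E": []}

print(grid_distance)                   # 5.0
print(hop_distance(graph, "A", "D"))   # 3
print(hop_distance(graph, "A", "E"))   # None: no path, so no distance at all
```

Adding or removing a single edge changes the graph distances, whereas nothing comparable can happen on the grid.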

What would a dataset of non-Euclidean data look like?

Usually, a data point (sample) consists of a domain and a signal.

- The domain can be, for example, a weighted adjacency matrix of the graph, which lists the "distances" between those pairs of nodes that are connected.

- Features can be either per node, per edge or both.

In a concrete example, the domain can be a road network graph with distances and connectivity between road intersections, and the signal can be per-node features indicating how many cars are in each intersection at a given point in time.
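A single data point of this kind could be sketched as follows. All numbers are invented, and the dictionary layout with `"domain"` and `"signal"` keys is just one possible convention, not a standard format. The small `cars_nearby` helper shows a function of the signal that has to consult the domain.

```python
import math

INF = math.inf  # marks pairs of intersections with no direct road

# Domain: weighted adjacency matrix of a hypothetical road network with four
# intersections; entry [i][j] is the road length in km between i and j.
adjacency = [
    [0.0, 1.2, INF, 0.8],
    [1.2, 0.0, 2.5, INF],
    [INF, 2.5, 0.0, 1.1],
    [0.8, INF, 1.1, 0.0],
]

# Signal: number of cars currently at each intersection (one value per node).
cars = [12, 3, 7, 0]

sample = {"domain": adjacency, "signal": cars}


def neighbours(adjacency, node):
    """Return the nodes directly connected to `node`."""
    return [j for j, d in enumerate(adjacency[node]) if j != node and d != INF]


def cars_nearby(sample, node):
    """Add up the signal at `node` and at its direct neighbours."""
    nearby = [node] + neighbours(sample["domain"], node)
    return sum(sample["signal"][j] for j in nearby)


print(neighbours(adjacency, 0))  # [1, 3]
print(cars_nearby(sample, 0))    # 15
```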

Of course, the domain can also differ between data points (e.g. in the PROTEINS dataset, where each protein is a graph of amino acids (nodes) connected by edges, and different proteins have different structures).

A non-Euclidean dataset simply consists of multiple such data points, which may or may not have the same underlying domain (i.e. same graph structure defined by an adjacency matrix).
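As a rough sketch, such a dataset can be held as a plain list of samples, each carrying its own domain and signal. The `GraphSample` class and the toy values below are hypothetical, and `same_domain` is a deliberately naive check that compares node counts and edge sets under a fixed node labelling (it does not test graph isomorphism).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GraphSample:
    edges: List[Tuple[int, int]]  # domain: undirected edges between node ids
    node_features: List[float]    # signal: one value per node

    @property
    def num_nodes(self) -> int:
        return len(self.node_features)


def same_domain(a: GraphSample, b: GraphSample) -> bool:
    """Naive check: same node count and same undirected edge set."""
    edges_a = {frozenset(e) for e in a.edges}
    edges_b = {frozenset(e) for e in b.edges}
    return a.num_nodes == b.num_nodes and edges_a == edges_b


dataset = [
    GraphSample(edges=[(0, 1), (1, 2)], node_features=[0.5, 1.0, 0.2]),
    GraphSample(edges=[(0, 1), (1, 2), (2, 3), (3, 0)],
                node_features=[0.1, 0.4, 0.9, 0.3]),
    GraphSample(edges=[(1, 0), (2, 1)], node_features=[0.7, 0.7, 0.1]),
]

sizes = [sample.num_nodes for sample in dataset]
print(sizes)                                 # [3, 4, 3]
print(same_domain(dataset[0], dataset[2]))   # True: same graph, new signal
print(same_domain(dataset[0], dataset[1]))   # False: different structure
```

The first and third samples share a domain and differ only in their signal (as with a road network observed at two points in time), while the second sample has a different structure (as with the proteins mentioned above).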