Linear algebra (Osnabrück 2024-2025)/Part II/Lecture 32

- Orthogonality

An inner product allows us to express what it means for two vectors to be orthogonal to each other.

Let $V$ denote a vector space over $\mathbb{K}$, endowed with an inner product $\langle -,- \rangle$. We say that two vectors $v, w \in V$ are orthogonal (or perpendicular) to each other if

$$\langle v, w \rangle = 0.$$

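The following reasoning shows that orthogonality, defined via the inner product, corresponds to the intuitively given orthogonality.[1] For orthogonal vectors $v, w$, the vector $v$ has the same distance to the points $w$ and $-w$. This holds because of

$$\| v - w \|^2 = \langle v - w, v - w \rangle = \langle v, v \rangle - \langle v, w \rangle - \langle w, v \rangle + \langle w, w \rangle = \langle v, v \rangle + \langle w, w \rangle = \langle v + w, v + w \rangle = \| v - (-w) \|^2.$$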

The reverse statement holds as well; see Exercise 32.1.

Let us recall the Theorem of Pythagoras.

The following theorem is the Theorem of Pythagoras; more precisely, it is the version in the context of an inner product, and it is trivial. However, the relation to the classical, elementary-geometric Theorem of Pythagoras is difficult, because it is not clear at all whether our concept of orthogonality and our concept of a length, both introduced via the inner product, coincide with the corresponding intuitive concepts. That our concept of a norm is the true length concept rests itself on the Theorem of Pythagoras in a Cartesian coordinate system, which presupposes the classical theorem.

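Let $V$ be a $\mathbb{K}$-vector space, endowed with an inner product $\langle -,- \rangle$. Let $v, w \in V$ be vectors that are orthogonal to each other. Then

$$\| v + w \|^2 = \| v \|^2 + \| w \|^2.$$

We have

$$\| v + w \|^2 = \langle v + w, v + w \rangle = \langle v, v \rangle + \langle v, w \rangle + \langle w, v \rangle + \langle w, w \rangle = \| v \|^2 + \| w \|^2,$$

because the two mixed terms vanish by orthogonality.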
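The identity can be checked numerically. The following is a minimal sketch for the standard inner product on $\mathbb{R}^3$; the vectors `v` and `w` are made-up sample data, chosen so that they are orthogonal. It also checks that `v` has the same distance to `w` and to `-w`.

```python
def inner(x, y):
    """Standard inner product on R^n (real case only)."""
    return sum(s * t for s, t in zip(x, y))

def norm_sq(x):
    """Squared norm induced by the inner product."""
    return inner(x, x)

# Hypothetical sample vectors with <v, w> = 12 + 0 - 12 = 0.
v = (3, 0, 4)
w = (4, 5, -3)

v_plus_w = tuple(s + t for s, t in zip(v, w))
v_minus_w = tuple(s - t for s, t in zip(v, w))

print(inner(v, w))                              # orthogonality check
print(norm_sq(v_plus_w), norm_sq(v) + norm_sq(w))
print(norm_sq(v_minus_w), norm_sq(v_plus_w))    # same distance to w and -w
```

Integer entries are used so that all comparisons are exact.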

Let $V$ be a $\mathbb{K}$-vector space, endowed with an inner product, and let $U \subseteq V$ denote a linear subspace. Then

$$U^\perp = \{ v \in V \mid \langle v, u \rangle = 0 \text{ for all } u \in U \}$$

is called the orthogonal complement of $U$.

The orthogonal complement of a linear subspace is again a linear subspace; see Exercise 32.8. If a generating system of $U$ is given, then a vector $v$ belongs to the orthogonal complement of $U$ if and only if it is orthogonal to all the vectors of the generating system; see Exercise 32.9.

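Let $V = \mathbb{R}^n$, endowed with the standard inner product. For the one-dimensional linear subspace $\mathbb{R} e_i$, generated by the standard vector $e_i$, the orthogonal complement consists of all vectors $(x_1, \ldots, x_{i-1}, 0, x_{i+1}, \ldots, x_n)$, where the $i$-th entry is $0$. For a one-dimensional linear subspace $\mathbb{R} v$, generated by a vector

$$v = (a_1, \ldots, a_n) \neq 0,$$

the orthogonal complement can be found by determining the solution space of the linear equation

$$a_1 x_1 + \cdots + a_n x_n = 0.$$

The orthogonal space

$$(\mathbb{R} v)^\perp = \{ x \in \mathbb{R}^n \mid \langle x, v \rangle = 0 \}$$

has dimension $n-1$; this is a so-called hyperplane. The vector $v$ is called a normal vector for the hyperplane $(\mathbb{R} v)^\perp$.

For a linear subspace $U \subseteq \mathbb{R}^n$ that is given by a basis (or a generating system) $v_i$, $i = 1, \ldots, m$, the orthogonal complement is the solution space of the system of linear equations

$$A x = 0,$$

where $A$ is the matrix formed by the vectors $v_i$ as rows.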
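The description of the orthogonal complement as a solution space can be turned into a small computation. The sketch below assumes the real case with the standard inner product, writes the generators as the rows of a matrix, and solves the homogeneous system by Gaussian elimination over exact fractions. The function name `null_space` and the sample generators are illustrative choices, not part of the lecture.

```python
from fractions import Fraction

def null_space(rows):
    """Return a basis of {x : A x = 0}, where A has the given rows."""
    a = [[Fraction(x) for x in row] for row in rows]
    n = len(a[0])
    pivots = []   # pivot column of each reduced row, in row order
    r = 0         # index of the next pivot row
    for c in range(n):
        # Look for a row at or below r with a non-zero entry in column c.
        p = next((i for i in range(r, len(a)) if a[i][c] != 0), None)
        if p is None:
            continue
        a[r], a[p] = a[p], a[r]
        a[r] = [x / a[r][c] for x in a[r]]
        for i in range(len(a)):
            if i != r and a[i][c] != 0:
                f = a[i][c]
                a[i] = [x - f * y for x, y in zip(a[i], a[r])]
        pivots.append(c)
        r += 1
        if r == len(a):
            break
    # One basis vector per free (non-pivot) column.
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        x = [Fraction(0)] * n
        x[free] = Fraction(1)
        for row, pc in zip(a, pivots):
            x[pc] = -row[free]
        basis.append(x)
    return basis

def dot(x, y):
    return sum(s * t for s, t in zip(x, y))

# Hypothetical generating system of a subspace U of R^4.
generators = [(1, 2, 0, 1), (0, 1, 1, 1)]
complement = null_space(generators)
for x in complement:
    print([str(t) for t in x], [dot(x, g) for g in generators])
```

Every returned vector is orthogonal to each generator, and the number of returned vectors is $n$ minus the rank of $A$.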

- Orthonormal bases

Let $V$ be a $\mathbb{K}$-vector space, endowed with an inner product. A basis $v_i$, $i \in I$, of $V$ is called an orthogonal basis if

$$\langle v_i, v_j \rangle = 0 \text{ for all } i \neq j.$$

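Let $V$ be a $\mathbb{K}$-vector space, endowed with an inner product $\langle -,- \rangle$. A basis $v_i$, $i \in I$, of $V$ is called an orthonormal basis if

$$\langle v_i, v_i \rangle = 1 \text{ for all } i \in I \text{ and } \langle v_i, v_j \rangle = 0 \text{ for all } i \neq j.$$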

The elements in an orthonormal basis have norm $1$, and they are orthogonal to each other. Hence, an orthonormal basis is an orthogonal basis that also satisfies the norm condition

$$\| v_i \| = 1 \text{ for all } i \in I.$$

It is easy to transform an orthogonal basis into an orthonormal basis, by replacing every $v_i$ by its normalization $\frac{v_i}{\| v_i \|}$ (as $v_i$ is part of a basis, its norm is not $0$). A family of vectors, all of norm $1$ and orthogonal to each other, but not necessarily a basis, is called an orthonormal system.

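Let $V$ be a $\mathbb{K}$-vector space, endowed with an inner product, and let $u_i$, $i \in I$, be an orthonormal basis of $V$. Then the coefficients of a vector $v$, with respect to this basis, are given by

$$\langle v, u_i \rangle.$$

Since we have a basis, there exists a unique representation

$$v = \sum_{j \in I} c_j u_j$$

(where all $c_j$ are $0$ up to finitely many). Therefore, the claim follows from

$$\langle v, u_i \rangle = \Big\langle \sum_{j \in I} c_j u_j, u_i \Big\rangle = \sum_{j \in I} c_j \langle u_j, u_i \rangle = c_i.$$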
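As a small illustration of this coefficient formula, the following sketch computes the coordinates of a vector in $\mathbb{R}^2$ by taking inner products and then rebuilds the vector from them. The basis and the vector `v` are sample data chosen for this illustration; exact fractions avoid rounding issues.

```python
from fractions import Fraction as F

def inner(x, y):
    """Standard inner product on R^n (real case only)."""
    return sum(s * t for s, t in zip(x, y))

# Sample orthonormal basis of R^2 (assumed data, not from the lecture).
u = [(F(3, 5), F(4, 5)), (F(-4, 5), F(3, 5))]
v = (F(2), F(1))

# Coefficient of v with respect to u_i is <v, u_i>.
coeffs = [inner(v, ui) for ui in u]

# Rebuild v as the linear combination sum_i c_i u_i.
rebuilt = tuple(sum(c * ui[k] for c, ui in zip(coeffs, u)) for k in range(2))

print([str(c) for c in coeffs])
print(rebuilt == v)
```

No linear system has to be solved here, which is the practical advantage of an orthonormal basis over an arbitrary one.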

We will mainly consider orthonormal bases in the finite-dimensional case. In $\mathbb{K}^n$ with the standard inner product, the standard basis is an orthonormal basis. In the plane $\mathbb{R}^2$, any orthonormal basis is of the form $(a, b), (-b, a)$ or of the form $(a, b), (b, -a)$, where

$$a^2 + b^2 = 1$$

holds. For example, $\left(\frac{3}{5}, \frac{4}{5}\right), \left(-\frac{4}{5}, \frac{3}{5}\right)$ is an orthonormal basis. The following Gram–Schmidt orthonormalization describes a method to construct, starting with any basis of a finite-dimensional vector space, an orthonormal basis that generates the same flag of linear subspaces.

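Let $V$ be a finite-dimensional $\mathbb{K}$-vector space, endowed with an inner product, and let $v_1, \ldots, v_n$ be a basis of $V$. Then there exists an orthonormal basis $u_1, \ldots, u_n$ of $V$ with[2]

$$\langle v_1, \ldots, v_i \rangle = \langle u_1, \ldots, u_i \rangle$$

for all $i = 1, \ldots, n$.

We prove the statement by induction over $i$, that is, we construct successively a family of orthonormal vectors spanning the same linear subspaces. For $i = 1$, we just have to normalize $v_1$, that is, we replace it by $u_1 = \frac{v_1}{\| v_1 \|}$. Now suppose that the statement is already proven for $i$. Let a family of orthonormal vectors $u_1, \ldots, u_i$ fulfilling $\langle u_1, \ldots, u_i \rangle = \langle v_1, \ldots, v_i \rangle$ be already constructed. We set

$$w_{i+1} = v_{i+1} - \langle v_{i+1}, u_1 \rangle u_1 - \cdots - \langle v_{i+1}, u_i \rangle u_i.$$

Due to

$$\langle w_{i+1}, u_j \rangle = \langle v_{i+1}, u_j \rangle - \sum_{k=1}^{i} \langle v_{i+1}, u_k \rangle \langle u_k, u_j \rangle = \langle v_{i+1}, u_j \rangle - \langle v_{i+1}, u_j \rangle = 0,$$

this vector is orthogonal to all $u_1, \ldots, u_i$, and also

$$\langle u_1, \ldots, u_i, w_{i+1} \rangle = \langle v_1, \ldots, v_i, v_{i+1} \rangle$$

holds. By normalizing $w_{i+1}$, we obtain $u_{i+1}$.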
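The inductive construction in the proof translates directly into a loop. The following is a minimal sketch for the real case with the standard inner product and floating-point arithmetic; the function name `gram_schmidt`, the tolerance `1e-12`, and the sample basis are assumptions made for this illustration.

```python
import math

def inner(x, y):
    """Standard inner product on R^n (real case only)."""
    return sum(s * t for s, t in zip(x, y))

def gram_schmidt(basis):
    """Orthonormalize linearly independent vectors, following the proof:
    subtract from v_{i+1} its components along u_1, ..., u_i, then normalize."""
    ortho = []
    for v in basis:
        w = list(v)
        for u in ortho:
            c = inner(v, u)                      # coefficient <v_{i+1}, u_j>
            w = [wk - c * uk for wk, uk in zip(w, u)]
        norm = math.sqrt(inner(w, w))
        if norm < 1e-12:                         # assumed tolerance
            raise ValueError("input vectors are not linearly independent")
        ortho.append([wk / norm for wk in w])
    return ortho

# Hypothetical basis of R^3.
us = gram_schmidt([(1.0, 1.0, 0.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0)])
for u in us:
    print([round(x, 4) for x in u])
```

This is the classical variant, in which each coefficient is computed from the original vector, exactly as in the proof. For badly conditioned input in floating point, the modified variant (computing each coefficient from the partially reduced vector `w`) is usually preferred.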

Let be the kernel of the linear mapping

As a linear subspace of , carries the induced inner product. We want to determine an orthonormal basis of . For this, we consider the basis consisting of the vectors

We have ; therefore,

is the corresponding normed vector. According to the orthonormalization process,[3] we set

We have

Therefore,

is the second vector of the orthonormal basis.
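A computation of this kind can be reproduced with exact arithmetic. The sketch below uses assumed data, since the concrete mapping and vectors are not reproduced here: it takes the kernel of the hypothetical linear form $(x, y, z) \mapsto 2x + 3y - z$ on $\mathbb{R}^3$ with the hypothetical basis $v_1 = (1, 0, 2)$, $v_2 = (0, 1, 3)$. It orthogonalizes first and normalizes only at the end, which keeps all intermediate values rational.

```python
from fractions import Fraction as F
import math

def inner(x, y):
    """Standard inner product on R^n (real case only)."""
    return sum(s * t for s, t in zip(x, y))

# Assumed sample data: a basis of the kernel of (x, y, z) -> 2x + 3y - z.
v1 = (F(1), F(0), F(2))
v2 = (F(0), F(1), F(3))

# Orthogonalize without normalizing: w2 = v2 - (<v2, v1> / <v1, v1>) v1.
c = inner(v2, v1) / inner(v1, v1)
w2 = tuple(b - c * a for a, b in zip(v1, v2))

# Normalize at the very end (floating point enters only here).
u1 = [float(a) / math.sqrt(inner(v1, v1)) for a in v1]
u2 = [float(a) / math.sqrt(inner(w2, w2)) for a in w2]

print([str(a) for a in w2], str(inner(w2, w2)))
print(u1, u2)
```

Dividing by $\langle v_1, v_1 \rangle$ instead of normalizing $v_1$ first yields the same vector $w_2$, because $\langle v_2, u_1 \rangle u_1 = \frac{\langle v_2, v_1 \rangle}{\langle v_1, v_1 \rangle} v_1$.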

Let $V$ be a finite-dimensional $\mathbb{K}$-vector space, endowed with an inner product. Then there exists an orthonormal basis $u_1, \ldots, u_n$ of $V$.

This follows directly from Theorem 32.9.

Therefore, in a finite-dimensional vector space with an inner product, one can always extend a given orthonormal system to an orthonormal basis; see exercise *****.

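Let $V$ be a finite-dimensional $\mathbb{K}$-vector space, endowed with an inner product, and let $U \subseteq V$ denote a linear subspace. Then, we have

$$V = U \oplus U^\perp,$$

that is, $V$ is the direct sum of $U$ and its orthogonal complement.

From $v \in U \cap U^\perp$ we get directly

$$\langle v, v \rangle = 0,$$

thus $v = 0$. This means that the sum is direct. Let $u_1, \ldots, u_k$ be an orthonormal basis of $U$. We extend it to an orthonormal basis $u_1, \ldots, u_n$ of $V$. Then we have

$$u_{k+1}, \ldots, u_n \in U^\perp.$$

Therefore, $V$ is the sum of the linear subspaces $U$ and $U^\perp$.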

For the following statement, compare also Lemma 15.6 and exercise *****.

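Let $V$ be a $\mathbb{K}$-vector space, endowed with an inner product $\langle -,- \rangle$. Then the following statements hold.

- For linear subspaces $U \subseteq U' \subseteq V$, we have $U'^\perp \subseteq U^\perp$.

- We have $0^\perp = V$ and $V^\perp = 0$.

- Let $V$ be finite-dimensional. Then we have $(U^\perp)^\perp = U$.

- Let $V$ be finite-dimensional. Then we have $\dim(U^\perp) = \dim(V) - \dim(U)$.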

Proof

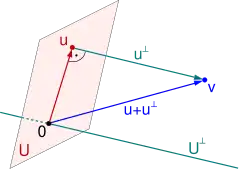

- Orthogonal projections

For a finite-dimensional $\mathbb{K}$-vector space $V$, endowed with an inner product, and a linear subspace $U \subseteq V$, there exists an orthogonal complement $U^\perp$, and the space $V$ has, according to Corollary 32.12, the direct sum decomposition

$$V = U \oplus U^\perp.$$

The projection

$$p_U \colon V \longrightarrow U$$

along $U^\perp$ is called the orthogonal projection onto $U$. This projection depends only on $U$, because the orthogonal complement is uniquely determined. Often, the composed mapping $V \to U \to V$ is also called the orthogonal projection onto $U$. An orthogonal projection is also described as dropping a perpendicular onto $U$.

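Let $V$ be a finite-dimensional $\mathbb{K}$-vector space, endowed with an inner product, and let $U \subseteq V$ denote a linear subspace with an orthonormal basis $u_1, \ldots, u_m$ of $U$. Then the orthogonal projection onto $U$ is given by

$$p_U(v) = \sum_{i=1}^{m} \langle v, u_i \rangle u_i.$$

We extend the basis to an orthonormal basis $u_1, \ldots, u_n$ of $V$. The orthogonal complement of $U$ is

$$U^\perp = \langle u_{m+1}, \ldots, u_n \rangle.$$

Due to Lemma 32.8, we have

$$v = \sum_{i=1}^{n} \langle v, u_i \rangle u_i = \sum_{i=1}^{m} \langle v, u_i \rangle u_i + \sum_{i=m+1}^{n} \langle v, u_i \rangle u_i.$$

The first sum lies in $U$ and the second in $U^\perp$. Therefore, $p_U$ is the projection onto $U$ along $U^\perp$.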
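The projection formula can be sketched in a few lines. The example below works in $\mathbb{R}^3$ with the standard inner product; the orthonormal basis of the subspace and the vector `v` are sample data for this illustration, and the function name `project` is likewise an illustrative choice.

```python
from fractions import Fraction as F

def inner(x, y):
    """Standard inner product on R^n (real case only)."""
    return sum(s * t for s, t in zip(x, y))

def project(v, onb):
    """Orthogonal projection of v onto the span of the orthonormal
    vectors in onb: the sum of <v, u_i> u_i over all u_i."""
    result = [F(0)] * len(v)
    for u in onb:
        c = inner(v, u)
        result = [r + c * uk for r, uk in zip(result, u)]
    return tuple(result)

# Assumed orthonormal basis of a plane U in R^3.
onb = [(F(1), F(0), F(0)), (F(0), F(3, 5), F(4, 5))]
v = (F(2), F(5), F(0))

p = project(v, onb)
residual = tuple(a - b for a, b in zip(v, p))   # component in the complement

print([str(a) for a in p])
print([str(inner(residual, u)) for u in onb])
```

The residual `v - p` is orthogonal to every basis vector of the subspace, which is exactly the statement that `v` splits into a part in $U$ and a part in $U^\perp$. The formula requires the given vectors to be orthonormal; for a general basis, one would orthonormalize first.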

- Footnotes

- ↑ For this, one has to accept that the length defined via the inner product coincides with the intuitively defined length, which rests on the elementary-geometric Theorem of Pythagoras.

- ↑ Here, $\langle u_1, \ldots, u_i \rangle$ denotes the linear subspace spanned by the vectors, not the inner product.

- ↑ Often, it is computationally easier to orthogonalize first and to normalize only at the very end; see example *****.
